Wow, the last time I really used the Elastic Stack it was called the ELK stack, and it was version 2.0. A lot of things have changed since then, so I am going to do an updated post on installing and setting up the Elastic stack.

Goals

- Learn all the components of the Elastic stack

- Learn the logging architecture/pipeline

- Set up the Elastic stack manually or with Docker

- Create visualizations and dashboards in Kibana

- Ingest Zeek logs into Elastic stack

Elastic stack terms

- Documents – A basic set of data that can be indexed. An example is a line in a log file.

- Type – A user-defined label for documents within an index. For instance, if we are collecting logs from Zeek in an index, we may assign one type for DNS logs and another type for conn logs. Note that mapping types are deprecated as of Elasticsearch 7.0.

- Indexes – A collection of documents that have similar characteristics.

- Shard – One piece of an index. Sharding is the process of splitting a large index into multiple pieces, and these shards may be distributed among the nodes of an Elasticsearch cluster.

Helpful curl commands for Elasticsearch

curl http://localhost:9200 – Status of ES

curl -X GET "http://127.0.0.1:9200/_cat/indices?v" – List all the indexes in ES

curl -XDELETE localhost:9200/<INDEX> – Delete index
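Deleting an index cannot be undone, so it can help to wrap the delete command in a small guard. The sketch below is one possible approach, not part of Elasticsearch or curl: the function name `es_delete_index` and the `ES_URL` and `DRY_RUN` variables are hypothetical names chosen for this example.

```shell
#!/bin/sh
# Hypothetical helper: es_delete_index, ES_URL and DRY_RUN are names
# made up for this sketch.
ES_URL="${ES_URL:-http://localhost:9200}"

es_delete_index() {
  index="$1"
  # Refuse empty names, wildcards and _all so a typo cannot remove
  # several indexes at once.
  case "$index" in
    ""|*"*"*|_all)
      echo "refusing to delete '$index'" >&2
      return 1
      ;;
  esac
  if [ "${DRY_RUN:-1}" = "1" ]; then
    # Default behaviour: only print the command that would be run.
    echo "curl -X DELETE $ES_URL/$index"
  else
    curl -X DELETE "$ES_URL/$index"
  fi
}
```

Running `es_delete_index zeek-2019.08.18` prints the command by default; setting `DRY_RUN=0` makes it issue the request.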

Logging pipeline explained

One of the most important things to understand is how the logging pipeline operates at a high level. The high-level overview helps beginners understand how all the components of the Elastic stack work together. At the beginning of our diagram above (left), we have the “LOG” phase, which is actually two functions in one. The first function is the producer of logs, such as MySQL, Zeek, or NGINX. The second function is the logging client (Filebeat, Rsyslog, syslog-ng) that reads these log files and ships them to the Elastic stack. For this blog post, we are going to focus on using Filebeat to ship logs because it is the log shipper created and maintained by Elastic.

The next phase is Logstash, where the logs are ingested into the Elastic stack and transformed. The Logstash pipeline has three stages: inputs, filters, and outputs. Logstash supports an array of ingestion plugins called inputs, which include Beats, Syslog, Kafka, and Amazon S3; more can be found here. Filters transform the data, for example renaming a field from destination_ip to dst_ip or enriching a value by resolving 1.2.3.4 to an FQDN of test.example.com. Lastly, Logstash can output data to an array of platforms such as Elasticsearch (covered in this blog post), Kafka, and MongoDB; more can be found here.

This blog post will focus on using Elasticsearch as our “long term storage” solution. Elasticsearch is the heart of the entire stack! Elasticsearch is a text-based search engine so you can give it a term like “foo”, and it will search all its logs for the keyword “foo”.

The last phase is Kibana, where you can visually search and create meaningful dashboards of the data within Elasticsearch. Later in this post, we will be creating a dashboard to visualize the Zeek data within Elasticsearch. This dashboard will contain widgets such as the top 10 most queried domains within the environment, the top 10 file hashes seen on the network, a map of all the GeoIP-correlated IP addresses, and a time series graph of the amount of traffic in/out of the network. Finally, Kibana and Elasticsearch work together to allow for granular searches; more info can be found here.

Install/Setup Elastic stack 7.0 on Ubuntu 18.04

Manual install

Add Elastic repo

sudo su

apt-get update -y

apt-get install apt-transport-https -y

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

apt-get update -y

Install/Setup Java

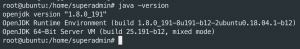

apt-get update -y

apt install openjdk-8-jdk

java -version

Install/Setup Elasticsearch

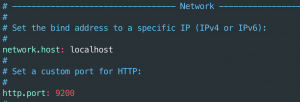

apt-get install elasticsearch

sed -i 's/#network.host: 192.168.0.1/network.host: localhost/g' /etc/elasticsearch/elasticsearch.yml

sed -i 's/#http.port: 9200/http.port: 9200/g' /etc/elasticsearch/elasticsearch.yml

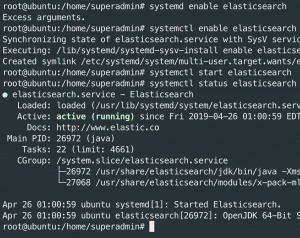

systemctl enable elasticsearch

systemctl start elasticsearch

systemctl status elasticsearch

Test Elasticsearch

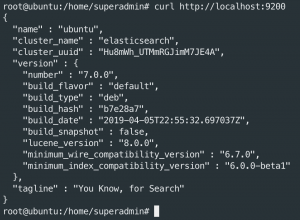

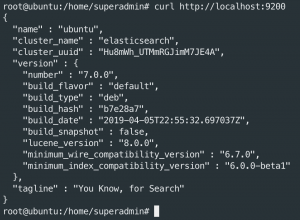

curl http://localhost:9200
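Elasticsearch can take a little while to start listening after `systemctl start`, so the curl test may fail if it is run immediately. A minimal retry sketch follows; `wait_for` and `WAIT_DELAY` are hypothetical names used only for this example.

```shell
#!/bin/sh
# Retry a command until it succeeds or the attempts run out.
# wait_for and WAIT_DELAY are hypothetical names used for this sketch.
wait_for() {
  attempts="$1"
  shift
  n=1
  while [ "$n" -le "$attempts" ]; do
    # Run the remaining arguments as the command to test.
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    n=$((n + 1))
    sleep "${WAIT_DELAY:-2}"
  done
  return 1
}
```

For example, `wait_for 30 curl -fsS http://localhost:9200 && echo "Elasticsearch is up"` would poll for roughly a minute before giving up.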

Install/Setup Logstash

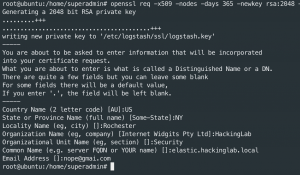

apt-get install logstash -y

mkdir /etc/logstash/ssl

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/logstash/ssl/logstash.key -out /etc/logstash/ssl/logstash.crt

wget https://raw.githubusercontent.com/CptOfEvilMinions/BlogProjects/master/ElasticStackv7/configs/logstash/pipeline/02-input-beats.conf -O /etc/logstash/conf.d/02-input-beats.conf

    #################################################################
    # Inputs are used to ingest logs from remote logging clients
    #################################################################
    input {
      # Ingest logs that match the Beat template
      beats {
        # Accept connections on port 5044
        port => 5044

        # Accept SSL connections
        ssl => true

        # Public cert files
        ssl_certificate => "/etc/logstash/ssl/logstash.crt"
        ssl_key => "/etc/logstash/ssl/logstash.key"

        # Do not verify client
        ssl_verify_mode => "none"
      }
    }

wget https://raw.githubusercontent.com/CptOfEvilMinions/BlogProjects/master/ElasticStackv7/configs/logstash/pipeline/10-filter-beats.conf -O /etc/logstash/conf.d/10-filter-beats.conf

    #################################################################
    # Filters are used to transform and modify the logs
    #################################################################
    filter {
      # Only apply these transformations to logs that contain the "Zeek" tag
      if "zeek" in [tags] {
        # Extract the json into Key value pairs
        json {
          source => "message"
        }

        # Remove the message field because it was extracted above
        mutate {
          remove_field => ["message"]
        }

        # Rename field names
        mutate {
          rename => ["id.orig_h", "src_ip" ]
          rename => ["id.orig_p", "src_port" ]
          rename => ["id.resp_h", "dst_ip" ]
          rename => ["id.resp_p", "dst_port" ]
          rename => ["host.name", "hostname" ]
        }
      }
    }

wget https://raw.githubusercontent.com/CptOfEvilMinions/BlogProjects/master/ElasticStackv7/configs/logstash/pipeline/20-output-elasticsearch.conf -O /etc/logstash/conf.d/20-elasticsearch-output.conf

    #################################################################
    # Outputs take the logs and output them to a long term storage
    #################################################################
    output {
      # Send logs that contain the zeek tag too
      if "zeek" in [tags] {
        # Outputting logs to elasticsearch
        elasticsearch {
          # ES host to send logs too
          hosts => ["http://localhost:9200"]

          # Index to store data in
          index => "zeek-%{+YYYY.MM.dd}"
        }
      }
    }

systemctl enable logstash

systemctl start logstash
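The `index => "zeek-%{+YYYY.MM.dd}"` setting in the output config creates one index per day, named from each event's `@timestamp`, which Logstash keeps in UTC. As a rough illustration, the same name can be computed in the shell with GNU `date`; the `zeek_index_for` function name is hypothetical and exists only in this sketch.

```shell
#!/bin/sh
# Print the daily index name for a given timestamp (GNU date syntax).
# zeek_index_for is a hypothetical helper name used only in this sketch.
zeek_index_for() {
  # -u keeps the calculation in UTC, matching Logstash's @timestamp.
  date -u -d "$1" +zeek-%Y.%m.%d
}

zeek_index_for "2019-08-18 12:28:50 UTC"
```

Because the date is taken in UTC, an event logged late in the evening in a timezone behind UTC lands in the next day's index. Passing `now` as the argument gives today's index name, which can be handy with the curl commands listed earlier.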

Install/Setup Kibana

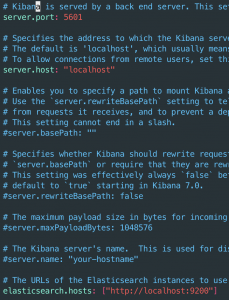

apt-get install kibana

sed -i 's/#server.host: "localhost"/server.host: "localhost"/g' /etc/kibana/kibana.yml

sed -i 's/#server.port: 5601/server.port: 5601/g' /etc/kibana/kibana.yml

sed -i 's?#elasticsearch.hosts:?elasticsearch.hosts:?g' /etc/kibana/kibana.yml

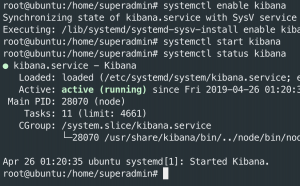

systemctl enable kibana

systemctl start kibana

systemctl status kibana

Install/Setup Nginx

apt-get install nginx

mkdir /etc/nginx/ssl

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /etc/nginx/ssl/nginx.key -out /etc/nginx/ssl/nginx.crt

openssl dhparam -out /etc/nginx/ssl/dhparam.pem 4096

- This will take a while (roughly 10-20 minutes)
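The `openssl req` commands in this post prompt interactively for the certificate subject. For scripted installs, the subject can be passed on the command line with `-subj` instead. The sketch below writes to a temporary directory rather than /etc/nginx/ssl, and `kibana.example.com` is a placeholder hostname, not a value from this post.

```shell
#!/bin/sh
# Generate a self-signed cert without prompts and print its details.
# kibana.example.com is a placeholder; the temp directory is only for
# illustration.
CERT_DIR="$(mktemp -d)"

openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -subj "/CN=kibana.example.com" \
  -keyout "$CERT_DIR/nginx.key" \
  -out "$CERT_DIR/nginx.crt" 2>/dev/null

# Confirm what was written before copying it into place.
openssl x509 -in "$CERT_DIR/nginx.crt" -noout -subject -enddate
```

The same flag applies to the Logstash certificate, where the name in the subject would normally be the hostname that Filebeat connects to.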

wget https://raw.githubusercontent.com/CptOfEvilMinions/BlogProjects/master/ElasticStackv7/configs/nginx/nginx.conf -O /etc/nginx/nginx.conf

wget https://raw.githubusercontent.com/CptOfEvilMinions/BlogProjects/master/ElasticStackv7/configs/nginx/kibana.conf -O /etc/nginx/conf.d/kibana.conf

sed -i 's#server_name kibana;#server_name <FQDN of Kibana>;#g' /etc/nginx/conf.d/kibana.conf

systemctl enable nginx

systemctl restart nginx

Test Kibana and Nginx

- Open a web browser

- Browse to https://<IP addr or FQDN of server>

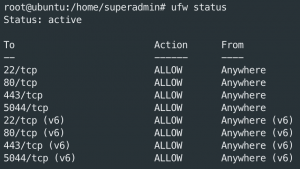

Configure UFW

ufw enable

ufw allow ssh

ufw allow http

ufw allow https

ufw allow 5044/tcp

ufw reload

ufw status

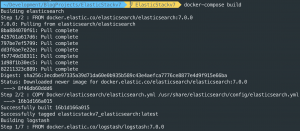

Deploy Elastic stack via Docker

openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout conf/ssl/docker.key -out conf/ssl/docker.crt

- Generate TLS certs

openssl dhparam -out conf/ssl/dhparam.pem 2048

docker-compose build

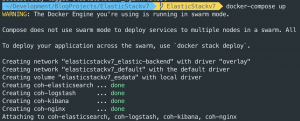

docker-compose up -d

docker ps

docker stats

Ship Zeek logs with Filebeat

Add Elastic repo

sudo su

apt-get update -y

apt-get install apt-transport-https -y

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

apt-get update -y

Install/Setup Filebeat on Ubuntu 18.04

apt-get install filebeat -y

mkdir /etc/filebeat/ssl

- SCP /etc/logstash/ssl/logstash.crt from the Logstash server to the Filebeat client at /etc/filebeat/ssl/logstash.crt

wget https://raw.githubusercontent.com/CptOfEvilMinions/BlogProjects/master/ElasticStackv7/configs/filebeat/filebeat.yml -O /etc/filebeat/filebeat.yml

    ############### Filebeat Configuration Example ###############

    #=================== Filebeat inputs =============
    filebeat.inputs:

    # Each - is an input. Most options can be set at the input level, so
    # you can use different inputs for various configurations.
    # Below are the input specific configurations.
    - type: log

      # Change to true to enable this input configuration.
      enabled: true

      # Paths that should be crawled and fetched. Glob based paths.
      paths:
        - /opt/bro/logs/current/*.log

    #=================== General =============
    # The name of the shipper that publishes the network data. It can be used to group
    # all the transactions sent by a single shipper in the web interface.
    #name:

    # The tags of the shipper are included in their own field with each
    # transaction published.
    tags: ["zeek"]

    # Optional fields that you can specify to add additional information to the
    # output.
    #fields:
    #  env: staging

    #=================== Outputs =============
    #------------------- Logstash output -----
    output.logstash:
      # The Logstash hosts
      hosts: ["localhost:5044"]

      # Optional SSL. By default is off.
      # List of root certificates for HTTPS server verifications
      #ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

      # Certificate for SSL client authentication
      #ssl.certificate: "/etc/filebeat/ssl/logstash.crt"

      # Client Certificate Key
      #ssl.key: "/etc/pki/client/cert.key"

sed -i 's#"localhost:5044"#"<IP addr or FQDN of Logstash>:5044"#g' /etc/filebeat/filebeat.yml

systemctl enable filebeat

systemctl start filebeat

systemctl status filebeat
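The Logstash filter shown earlier parses each line with `json { source => "message" }`, so Filebeat needs to ship Zeek logs that are written as JSON. Zeek's default tab-separated format, whose files begin with a `#separator` header, would instead produce JSON parse warnings in Logstash. A quick pre-flight check, as a sketch: the `is_json_log` function name is hypothetical.

```shell
#!/bin/sh
# Return 0 if the first non-empty line of a log file looks like a JSON object.
# is_json_log is a hypothetical helper name used only in this sketch.
is_json_log() {
  # grep exits non-zero on an empty or missing file, which we treat as "not JSON".
  first_line="$(grep -m 1 -v '^[[:space:]]*$' "$1")" || return 1
  case "$first_line" in
    "{"*) return 0 ;;
    *)    return 1 ;;
  esac
}
```

For example, `is_json_log /opt/bro/logs/current/conn.log || echo "conn.log is not JSON"` uses the log path from the Filebeat config above. If the check fails, JSON output can typically be enabled in Zeek by loading its `policy/tuning/json-logs` script; confirm this against the Zeek documentation for your version.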

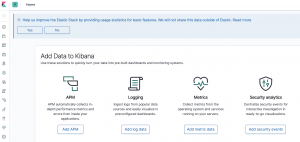

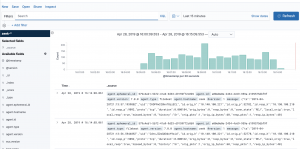

Configure/Utilizing Kibana

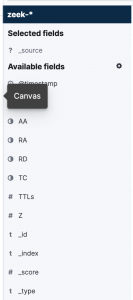

Create Kibana index pattern

- Open a web browser

- Browse to https://<IP addr or FQDN of server>

- Select the Discover icon in the top left – looks like a compass

- Create index pattern: Define index pattern

  - Enter “zeek-*” into “index pattern”

  - Select “Next step”

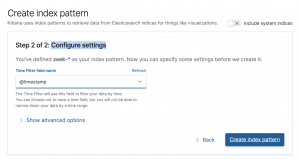

- Create index pattern: Configure settings

  - Select “@timestamp” for “time filter field name”

  - Select “Create index pattern”

- Select the Discover icon in the top left – looks like a compass

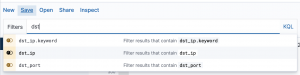

Searching with Kibana

Operators

- AND

- NOT

- OR

- : – equals

- :* – key exists

Example searches

The search bar helps you form queries as you type.

- Search as you type

  - Search term

  - Operator

  - Search term

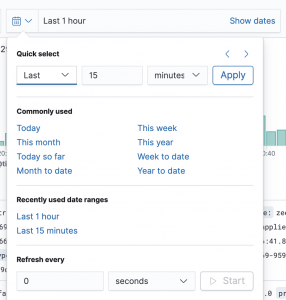

- Search with time frame

- Add field attributes – Hover over a field and select “Add”

- dst_ip:208.67.220.220 – Search for a specific IP address in the Zeek index

- dst_ip:208.67.220.* – Search for an IP address using a wildcard in the Zeek index

- NOT dst_ip: 208.67.220.220 – Search for any IP address other than 208.67.220.220 in the Zeek index

- dst_ip: 208.67.220.220 AND dst_port : 53 – AND operator

- dst_ip: 208.67.220.220 OR dst_ip:208.67.222.222 – OR operator

Create visualizations

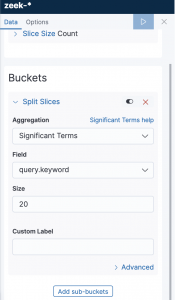

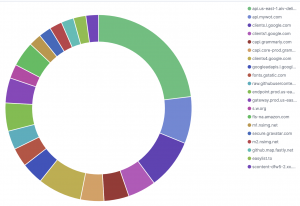

Create top 20 domains

- Select “Visualize”

- Select “Create a visualization”

- Select “Pie”

- Select “Split slices”

- Select Significant terms for aggregation

- Select query.keyword for field

- Enter 20 into Size

- Select “Save”

- Enter “top 20 domains”

- Select “Confirm save”

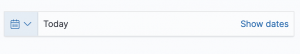

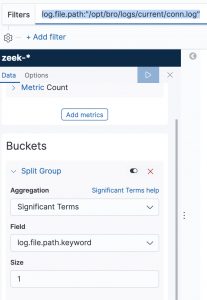

Create a connection log counter

- Select “Visualize”

- Select “+” in the top right

- Select “Metric”

- Select “Split group”

- Set timeframe to “Today” in top right

- Select Significant terms for aggregation

- Select log.file.path.keyword for field

- Enter 1 into Size

- Enter log.file.path:"/opt/bro/logs/current/conn.log" into filter

- Select “Save”

- Enter “Connection counter” for the name

- Select “Confirm save”

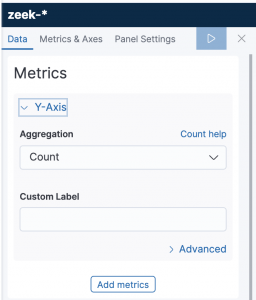

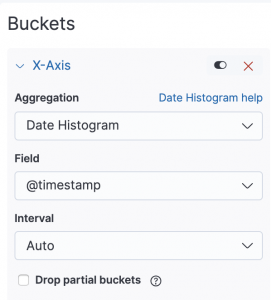

Create histogram

- Select “Visualize”

- Select “+” in the top right

- Select “Vertical bar”

- Expand Y-axis

  - Select Count for “Aggregation”

- Expand X-axis

  - Select Date Histogram for Aggregation

  - Select @timestamp for Field

  - Select Auto for Interval

- Select “Save”

- Enter “Zeek Histogram” for the title

- Select “Confirm save”

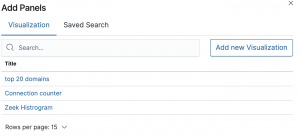

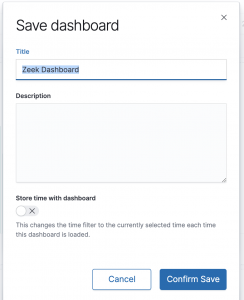

Create Zeek dashboard

- Select “Dashboard” on the left

- Select “Create new dashboard”

- Select “Add” in the top left

- Select each visualization

- Select “X” in top right

- Select “Save” in top right

- Enter “Zeek Dashboard”

- Select “Confirm save”

Hello,

Great post. I am having a problem with the SSL on filebeats to logstash.

Getting this error:

2019-08-18T12:28:50.705-0400 ERROR instance/beat.go:877 Exiting: error initializing publisher: key file not configured accessing ‘output.logstash.ssl’ (source:’/etc/filebeat/filebeat.yml’)

Exiting: error initializing publisher: key file not configured accessing ‘output.logstash.ssl’ (source:’/etc/filebeat/filebeat.yml’)

If I comment out ssl.certificate: “/etc/filebeat/ssl/logstash.crt”

it connects but fails the ssl on logstash.

Any Ideas on this one?

The error should be fixed in my new release.

Followed line by line and ran into parser errors:

[WARN ][logstash.filters.json ] Error parsing json {:source=>”message”, :raw=>”…….

What is the issue? I used your config files.

The error should be fixed in my new release.

Thank you so much for this! Got a lot of hiccups I was encountering with older guides figured out thanks to your write-up!

I’ve been trying to setup a new ELK Ubuntu server, but I get stuck in your guide around nginx, looks like the files are gone on your github?

Hey Stephane,

please see my updated blog post here: https://holdmybeersecurity.com/2021/01/27/ir-tales-the-quest-for-the-holy-siem-elastic-stack-sysmon-osquery/