As the title suggests, learning Kubernetes (k8s) was your typical hero’s journey for me. I set out on an adventure to conquer all there is to know about the Kubernetes beast. Alas, my attempts were thwarted by the frustratingly vast chasm of fire that is the Kubernetes lair. Like any good hero, I sharpened my wit and sought a wealth of knowledge from an infinite library until I happened upon a powerful Wizard whose guidance led me to finally overcome the beast’s lair.

This blog post, like the millions of blog posts that already exist on the internet, will provide instructions on how to set up a MicroK8s cluster; however, I will also cover additions that help you use Kubernetes in a homelab environment. Hopefully, this foundational services guide, or “Act 2” of learning Kubernetes, will provide a stronger understanding so that you feel empowered to go and explore more applications on your own. The additions I found to be foundational in my homelab are: using your Synology device as persistent storage, creating an ingress controller/load balancer using Traefik + cert-manager, deploying our first web app (CyberChef), and monitoring our cluster with Prometheus + Grafana. I will also recite heroic tales of overcoming the tribulations I encountered along the way. My hope is that this blog post will provide a bootstrapped cluster with the capabilities that enable beginners learning Kubernetes to continue their own heroic journey.

Goals

- Create a single-instance MicroK8s “cluster”

- Create a pfSense DHCP lease pool

- Create a DNS zone using FreeIPA to access k8s services via an FQDN

- Set up kubectl on remote macOS

- Spin up MetalLB to provision IP addresses

- Set up Traefik load balancer + cert-manager

- Spin up CyberChef with a Traefik ingress route

- Set up persistent storage using local host storage or Synology

- Set up Prometheus and Grafana for metrics

Requirements

- FreeIPA with the ability to create a DNS zone

- pfSense/DHCP service to set up a DHCP pool

- Ubuntu 22.04 instance

- Fundamental understanding of Helm

Act 1: Kubernetes 101 course

My blogs and my projects are a way for me to learn and give back to the community. Since k8s has been widely adopted in enterprise environments, I wanted to learn it and create content for my blog using the platform. I tried to learn k8s on several occasions via different courses and instructors, and each attempt ended with me frustratingly giving up. I personally felt that all the classes except one did not have a teaching structure that worked for me. They would try to teach all of the vocabulary and concepts up front without a practical application or lab immediately following, which made much of the information hard to follow. The overlapping teachings of “LEARN ALL THE THINGS” but “we won’t use this till later”, plus spending hours learning only to find out that the content was outdated because k8s development moves fast, made me lose interest time and time again.

After countless hours of courses, as much as I wanted to continue learning, I felt that I was getting nowhere. All of these projects are done in my free time, and I want to be creating impactful content in my free time! If you have experienced some combination of these feelings/experiences while beginning to learn k8s, then I HIGHLY HIGHLY HIGHLY recommend the following Udemy course: Kubernetes for the Absolute Beginners – Hands-on.

I should note that this endorsement is a personal recommendation and I do not receive any incentives. This was the first course that I COMPLETED that didn’t teach “ALL THE THINGS” but rather focused on the absolute basic building blocks of all k8s deployments. Since this class focused on the building blocks (pods, deployments, services), the information taught in each module was something I could apply in the labs at the end of each chapter. Additionally, the class provided access to a Kubernetes cluster via KodeKloud, so you can focus on learning k8s rather than on provisioning and managing a cluster. After this course, I was writing my own k8s YAML manifest files to deploy services for my homelab. Lastly, it should be noted that this class does not teach everything there is to know about k8s. But it does provide a solid foundation, which was a catalyst for me teaching myself more advanced k8s concepts: teach a man to fish.

Lastly, when I take a Udemy course I usually set a goal I would like to accomplish after said course. For Kubernetes, my goal was to host CyberChef behind a Traefik load balancer on my homelab cluster. Completing the course above gave me the knowledge to achieve half of my goal: hosting CyberChef on my MicroK8s “cluster”, exposed using a NodePort. From that point I was able to build on the starting concepts I learned in order to complete the second half of my goal: deploying Traefik as a load balancer on my cluster and then hosting CyberChef behind Traefik.

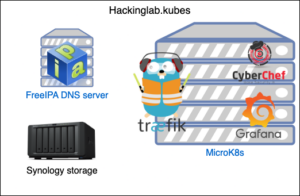

Network diagram

Background

What is Kubernetes

Kubernetes (k8s) is an open-source container-orchestration system for automating application deployment, scaling, and management. It was originally designed by Google, and is now maintained by the Cloud Native Computing Foundation. It aims to provide a “platform for automating deployment, scaling, and operations of application containers across clusters of hosts”. It works with a range of container tools, including Docker. Many cloud services offer a Kubernetes-based platform or infrastructure as a service (PaaS or IaaS) on which Kubernetes can be deployed as a platform-providing service. Many vendors also provide their own branded Kubernetes distributions.

What is MicroK8s

MicroK8s is an open-source system for automating deployment, scaling, and management of containerised applications. It provides the functionality of core Kubernetes components, in a small footprint, scalable from a single node to a high-availability production cluster. By reducing the resource commitments required in order to run Kubernetes, MicroK8s makes it possible to take Kubernetes into new environments, for example:

- turning Kubernetes into a lightweight development tool

- making Kubernetes available for use in minimal environments such as GitHub CI

- adapting Kubernetes for small-appliance IoT applications

Developers use MicroK8s as an inexpensive proving ground for new ideas. In production, ISVs benefit from lower overheads and resource demands and shorter development cycles, enabling them to ship appliances faster than ever. The MicroK8s ecosystem includes dozens of useful Addons – extensions that provide additional functionality and features.

Why Microk8s?

Deciding which flavor of k8s to use for your homelab cluster is a highly debated topic on the internet. So why did I pick MicroK8s? Because I finally got it to work lol. I finally accomplished my goal of hosting Cyberchef behind a Traefik load balancer on my homelab k8s “cluster”. I tried several different flavors but due to some combination of stale configurations/tutorials, Kubernetes features rapidly evolving, and being a novice I was unsuccessful. So as the reader you might be asking “what does this have to do with me?”.

What attracted me to continue using MicroK8s is that it has pre-built addons that you can deploy on your cluster with a single command and zero expertise needed. For example, I tried every config and Stack Overflow post on the internet to get a working deployment of MetalLB but was unable to. MicroK8s has a MetalLB addon that I was able to set up with a single command, microk8s enable metallb:<start of IP range>-<end of IP range>, and it just worked! As a beginner, these addons can be super helpful to lower the bar to start using k8s in your homelab. However, as I learn more about k8s, I would prefer to have more control of my “cluster” setup and not rely on addons. Therefore, at some point I believe I will outgrow MicroK8s and move to a platform like k3s where I set my own destiny.

What is Helm?

Helm is a tool that automates the creation, packaging, configuration, and deployment of Kubernetes applications by combining your configuration files into a single reusable package. Managing the many interdependent manifests an application needs is tedious by hand, and Helm provides one of the most accessible solutions to this problem, making deployments more consistent, repeatable, and reliable. To simplify this concept, think of Helm charts as a blueprint for building a house. The real magic is the ability to tune the blueprint, such as making the rooms bigger, adding a sunroom, adding a home security system, or adding furniture to a room. Thus, you don’t need to know how to do these things; you simply choose what you want.

To provide a real-world example, GitLab has a Helm chart. You have the ability to configure the database as a single instance vs. high availability, the database password, how many instances of GitLab to run concurrently for high availability, what ingress controller to use, and more. I don’t have any idea how to run Postgres in high availability mode on k8s, but the Helm chart knows how to configure that. This allows me to choose what I want without needing to know how.

Create DHCP pool via pfSense

If you plan to host services on your MicroK8s cluster, your services may require “external” (homelab) IP addresses from pfSense. This section will walk through how to set up a DHCP pool for your MicroK8s “cluster” to be used by MetalLB.

DHCP pool

- Log into pfSense

- Services > DHCP Server

- Select the appropriate tab for the network your MicroK8s exists in; mine exists in a network named dev

- Scroll down to “Additional Pool”

- Select “Add +”

- Enter an IP address range that should be reserved for your MicroK8s “cluster”


DHCP static mapping

- Obtain the MAC address of the MicroK8s VM

- Scroll down to “DHCP Static Mappings for this Interface”

- Select “Add +”

- Enter the VM interface MAC address into MAC address

- Enter a client identifier – I use the hostname

- Enter an IP address NOT from the DHCP pool above

- Enter hostname

- Enter a description

- Save

Create DNS zone for MicroK8s using FreeIPA

Create DNS zone

- Log into FreeIPA as an administrator

- Network Services > DNS > DNS Zones

- Select “Add”

- Enter a domain into Zone name

- Select “Add”


- Select “Settings” tab for your newly created zone

- Scroll down to the “Allow transfer” section. You need to allow your MicroK8s cluster to perform a zone transfer on your zone; more on this later.

- Add the IP address range(s) for your k8s cluster

Test DNS zone transfer

dig axfr <domain for k8s> @<IP addr of FreeIPA>

Install/Setup MicroK8s on Ubuntu 22.04

Install MicroK8s

- SSH into MicroK8s instance

sudo su

apt-get update -y && apt-get upgrade -y && reboot

- Install MicroK8s:

snap install microk8s --classic --channel=1.28

- Enable RBAC:

microk8s enable rbac

- Enable DNS, forwarding to FreeIPA:

microk8s enable dns:<DNS server>

Configure UFW

- Allow access to the k8s API:

ufw allow 16443/tcp

- Allow pod traffic on the CNI interface and routed traffic:

ufw allow in on cni0 && ufw allow out on cni0

ufw default allow routed

Install MetalLB

Kubernetes does not offer an implementation of network load balancers (Services of type LoadBalancer) for bare-metal clusters. The implementations of network load balancers that Kubernetes does ship with are all glue code that calls out to various IaaS platforms (GCP, AWS, Azure…). If you’re not running on a supported IaaS platform (GCP, AWS, Azure…), LoadBalancers will remain in the “pending” state indefinitely when created. Bare-metal cluster operators are left with two lesser tools to bring user traffic into their clusters, “NodePort” and “externalIPs” services. Both of these options have significant downsides for production use, which makes bare-metal clusters second-class citizens in the Kubernetes ecosystem. MetalLB aims to redress this imbalance by offering a network load balancer implementation that integrates with standard network equipment, so that external services on bare-metal clusters also “just work” as much as possible.

Without MetalLB or a similar software solution, the External IP of any newly created LoadBalancer service in Kubernetes will stay in the pending state indefinitely. MetalLB fills this gap so that external services on bare-metal clusters work much like their equivalents on IaaS providers. Note that MetalLB does not lease addresses from pfSense: it assigns each service of type LoadBalancer an IP from the range it is given and announces that address on the network. That is why, in the first step below, we install MetalLB with an IP range that should match the one reserved in pfSense above.

- Install MetalLB to handle MicroK8s “public” IPs for ingress:

microk8s enable metallb:<start IP addr>-<end IP addr>

microk8s kubectl get pods -n metallb-system
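Under the hood, recent MicroK8s releases (I believe 1.25 and later) configure MetalLB through its custom resources. Below is a sketch of roughly equivalent hand-written configuration for layer 2 mode, assuming MetalLB v0.13 or later (the metallb.io/v1beta1 CRDs). The resource names and the 10.150.100.50-10.150.100.99 range are hypothetical placeholders; you should not need to apply this when using the addon, it is only here to show what the single command sets up.

```yaml
# Hypothetical hand-written equivalent of `microk8s enable metallb:<start>-<end>`
# (MetalLB v0.13+ CRDs, layer 2 mode). Names and addresses are placeholders.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: homelab-pool          # hypothetical name
  namespace: metallb-system
spec:
  addresses:
    # Must match the range reserved for the cluster in pfSense
    - 10.150.100.50-10.150.100.99
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: homelab-l2            # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    # Answer ARP requests for addresses handed out from this pool
    - homelab-pool
```

In layer 2 mode, one node answers ARP requests for each service address, so no DHCP lease is involved; the pfSense reservation just keeps other devices off those IPs.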

Test MetalLB

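- Create a test deployment and expose it with a LoadBalancer service:

kubectl create deploy nginx --image nginx

kubectl expose deploy nginx --port 80 --type LoadBalancer

- Get the external IP assigned by MetalLB:

kubectl get svc nginx

- Open a web browser to

http://<NGINX load balancer IP addr>

- Clean up load balancer:

kubectl delete svc nginx

- Clean up deployment:

kubectl delete deploy nginx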
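If you prefer declarative manifests, the two imperative commands in this test produce objects roughly like the ones below (trimmed; kubectl adds a few defaults of its own, and you can see its exact output with kubectl create deploy nginx --image nginx --dry-run=client -o yaml). Saving this to a file, for example a hypothetical nginx-test.yaml, and running kubectl apply -f on it should give the same result, and kubectl delete -f cleans up both objects at once.

```yaml
# Roughly what `kubectl create deploy nginx --image nginx` and
# `kubectl expose deploy nginx --port 80 --type LoadBalancer` generate.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: LoadBalancer      # MetalLB assigns the external IP
  selector:
    app: nginx            # route to pods created by the Deployment above
  ports:
    - port: 80            # port exposed on the load balancer IP
      targetPort: 80      # port the nginx container listens on
      protocol: TCP
```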

Quick Helm primer

ArtifactHub

As stated above, think of Helm charts as a blueprint for building a house. For this hands-on example, we are going to deploy NGINX just like we did above, but with Helm. Helm charts can be found on ArtifactHub, a site that indexes charts published in many different repositories. You can browse ArtifactHub for all sorts of pre-existing charts that you could deploy on your cluster. For this example, we are just going to search “NGINX” and use the nginx chart provided by Bitnami.

values.yaml

If you select “Default values” on the right, you will be presented with all the configurable options for this chart. Below is a values.yaml config file which we will use to instruct Helm how to deploy NGINX on our “cluster”. The values selected below were chosen to demonstrate how to use Helm and not for any particular significance. At the top, we start by specifying the image tag, aka the container version of NGINX to deploy. The chart was set to version 1.25.1 by default, but I wanted to show that we can override the default value (version) if needed. Next, we have our service section, which instructs Helm how to build the service resource for NGINX. We set the service type to LoadBalancer like in the previous section, I statically mapped the http port to port 1234, and I specified the IP address MetalLB should assign to the service from the range reserved above. Next, we will deploy this Helm chart to our “cluster”.
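The real values.yaml lives in the repo cloned in the next section; here is a sketch matching the description above, using key names from the bitnami/nginx chart's defaults (image.tag, service.type, service.ports.http, service.loadBalancerIP). The tag 1.25.2 and the IP 10.150.100.55 are hypothetical; pick a tag that actually exists for the Bitnami image and an address inside your MetalLB range.

```yaml
# Hypothetical values.yaml for the bitnami/nginx chart.
# Check "Default values" on ArtifactHub for your chart version.
image:
  # Override the chart's default NGINX image tag (hypothetical tag)
  tag: "1.25.2"

service:
  # Ask MetalLB for an external IP instead of exposing a NodePort
  type: LoadBalancer
  ports:
    # Serve HTTP on port 1234 of the load balancer IP
    http: 1234
  # Request a specific address from the MetalLB range (hypothetical IP)
  loadBalancerIP: 10.150.100.55
```

The Service field that loadBalancerIP maps to (spec.loadBalancerIP) has been deprecated upstream since Kubernetes 1.24, but it is still the simplest way to pin an address in a homelab.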

Deploy

First, we start by adding the Bitnami repo to Helm so that we can pull the NGINX chart remotely. Second, we run the following command: helm install my-nginx bitnami/nginx -f values.yaml. helm install tells Helm we want to deploy this chart and that there should be no pre-existing deployment with that name. Third, we specify the name of the Helm release, which in this example is my-nginx. Fourth, we specify the Helm chart we want to deploy, which in our case is the NGINX chart provided by Bitnami. Lastly, we append -f values.yaml, which instructs Helm to ingest configuration settings from that file and use them to configure the deployment.

- Install Helm

- Clone repo for this blog post:

git clone https://github.com/CptOfEvilMinions/BlogProjects.git

cd BlogProjects/microk8s/nginx-helm

- Add Helm repo:

helm repo add bitnami https://charts.bitnami.com/bitnami

- Install Helm chart:

helm install my-nginx bitnami/nginx -f values.yaml

helm ls

kubectl get svc

- Ignore the IP address that was assigned outside the DHCP scope


- Query service port:

export SERVICE_PORT=$(kubectl get --namespace default -o jsonpath="{.spec.ports[0].port}" services my-nginx)

- Query service IP addr:

export SERVICE_IP=$(kubectl get svc --namespace default my-nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

curl "http://${SERVICE_IP}:${SERVICE_PORT}"

- Set the service port to 7890 in values.yaml

- Update Helm deployment:

helm upgrade my-nginx bitnami/nginx -f values.yaml

kubectl get svc

- Delete the helm deployment:

helm uninstall my-nginx

SOOOOOO many options

At first, Helm may seem overwhelming because it provides so many configurable settings. While the settings vary from chart to chart, a few show up in almost every chart: how to configure persistence for storage (if needed), how to configure ingress to access the service remotely, and autoscaling settings. I also typically Google “<service> helm” and review current configs on GitHub, the common parameters section on ArtifactHub, or blog posts on how to deploy the service. One of these avenues will typically provide a good starting point for which Helm settings need tuning. Lastly, context about the service may lead you to the values that need to be configured. For example, if you want LDAP authentication on GitLab, you know to look for the LDAP settings in the GitLab chart.
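As a rough illustration of those recurring settings, here is a hypothetical values fragment using the key names common in Bitnami charts (persistence.*, ingress.*, autoscaling.*). Other chart authors name these differently, so treat every key as an assumption and confirm it with helm show values <repo>/<chart> for the chart you are deploying.

```yaml
# Hypothetical values showing settings that recur across many charts.
# Key names follow common Bitnami conventions; other charts may differ.
persistence:
  enabled: true
  storageClass: local-path        # hypothetical; use a class from `kubectl get sc`
  size: 8Gi
ingress:
  enabled: true
  ingressClassName: traefik       # hypothetical; match your ingress controller
  hostname: app.hackinglab.kubes  # hypothetical FQDN
autoscaling:
  enabled: false                  # scale replicas on CPU load when true
  minReplicas: 1
  maxReplicas: 3
```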

Helm template

Helm is a layer of abstraction for generating k8s manifest files. Run the following command: helm template my-nginx bitnami/nginx -f values.yaml and review the output (screenshot below). As you can see below, Helm generates k8s YAML to create a service with the values we provided in values.yaml. This command is a great way to debug a chart and, if you enjoy learning what happens under the hood, a great way to learn how to write k8s manifest files yourself.

Optional: Generate k8s cluster CA with FQDN

This section is optional but is nice to have in a homelab environment. I personally don’t like using IP addresses as the primary way to interact with a service because DNS is friendlier to humans. However, by default MicroK8s generates its API server certificate without your FQDN in the subject alternative names, which leads to certificate errors when connecting by name. Therefore, below we will re-generate that certificate with an FQDN and add the captain node IP address as a backup measure.

Create FreeIPA DNS A record

- Log into FreeIPA webUI as an admin

- Network services > DNS > DNS Zones

- Select the FQDN

- Select “Add” in the top right

- Enter captain into Record name

- Select A for Record Type

- Enter k8s IP address into IP Address

- Select “Add”

Configure MicroK8s CSR

- SSH into MicroK8s “cluster”

- Open the CSR template and add the relevant information:

vim /var/snap/microk8s/current/certs/csr.conf.template

- Set CN in [dn] to the FQDN of the MicroK8s cluster captain

- Add DNS.6 under [alt_names] and set it to the FQDN of the MicroK8s cluster captain

- Add IP.3 under [alt_names] and set it to the IP address of the MicroK8s cluster captain

- Save

- Generate a new certificate by restarting MicroK8s:

/snap/bin/microk8s.stop && /snap/bin/microk8s.start

- Confirm the API server is healthy:

curl https://127.0.0.1:16443/healthz -k -v

Set up kubectl on remote macOS

Kubernetes provides a command line tool for communicating with a Kubernetes cluster’s control plane, using the Kubernetes API. This tool is named kubectl. Below I demonstrate how to generate a config that kubectl can use to talk to your MicroK8s “cluster”.

- SSH into MicroK8s server

microk8s config

- Copy the kubectl config generated by MicroK8s

- Logout

- Open terminal on macOS client

brew install kubectl

mkdir -p ~/.kube

vim ~/.kube/config

- Paste output from above

- Set cluster address to FQDN (macOS sed requires the empty '' argument after -i):

sed -i '' 's#<IP addr of k8s>#<FQDN of k8s>#g' ~/.kube/config

kubectl get pods -A
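For orientation, the edited ~/.kube/config should look roughly like the trimmed sketch below. The cluster, context, and user names are what I believe microk8s config emits, and captain.hackinglab.kubes is a hypothetical FQDN; keep whatever names and credentials your own output contains. The server hostname has to be one of the names in the API server certificate, which is why the optional CSR section adds the FQDN.

```yaml
# Trimmed sketch of ~/.kube/config after the sed edit. Names and the FQDN
# are hypothetical; keep whatever `microk8s config` generated for you.
apiVersion: v1
kind: Config
clusters:
  - name: microk8s-cluster
    cluster:
      # CA data copied verbatim from `microk8s config` output
      certificate-authority-data: <base64 CA from microk8s config>
      # API server reached by FQDN; 16443 is the MicroK8s API port
      server: https://captain.hackinglab.kubes:16443
contexts:
  - name: microk8s
    context:
      cluster: microk8s-cluster
      user: admin
current-context: microk8s
users:
  - name: admin
    user:
      # Credential copied verbatim from `microk8s config` output
      # (some versions emit client-certificate-data/client-key-data instead)
      token: <token from microk8s config>
```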

Configure CoreDNS for local DNS zones

If your homelab environment has a DNS server that hosts a local zone then I would recommend setting up CoreDNS to perform a zone transfer of those DNS records. By having CoreDNS serve those DNS records it will reduce the load on your DNS server and avoid containers resolving incorrectly.

kubectl get cm -n kube-system coredns -o=yaml > coredns/coredns_cm.yaml

vim coredns/coredns_cm.yaml

- Add a secondary clause section to the config like the screenshot below or use the CoreDNS documentation

kubectl apply -f coredns/coredns_cm.yaml

- Test resolution from inside the cluster:

kubectl run -n default -it --rm --restart=Never test --image=alpine -- ash

apk add --update -q bind-tools

dig <FQDN>
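A trimmed sketch of the edited ConfigMap might look like the following, assuming your zone is hackinglab.kubes and FreeIPA answers at 10.150.100.10 (both hypothetical placeholders). The new server block uses the CoreDNS secondary plugin, which pulls the zone from FreeIPA via AXFR (the reason “Allow transfer” was configured earlier) and then answers for it locally; everything else keeps flowing through the existing .:53 block.

```yaml
# Trimmed sketch of coredns/coredns_cm.yaml. Keep the existing ".:53" block
# exported from your cluster unchanged; only the second server block is new.
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        # ...existing MicroK8s configuration stays here...
        errors
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        forward . 10.150.100.10
        cache 30
        loop
        reload
    }
    # New block: serve the homelab zone as a secondary (AXFR from FreeIPA)
    hackinglab.kubes:53 {
        errors
        secondary {
            transfer from 10.150.100.10
        }
    }
```

Because the default Corefile includes the reload plugin, CoreDNS should pick up the applied ConfigMap on its own after a short delay.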

Configure persistent storage

This section walks through configuring local storage to use your MicroK8s instance’s hard drive for persistent storage, or using Synology via iSCSI. Storage can be a tricky thing with Kubernetes and is something that should be considered before diving in head first.

My experience

I started to notice that my single-node cluster/captain node was becoming overwhelmed trying to both manage k8s tasks and run the services I deployed. For example, in some instances kubectl would take 5 seconds or more to respond when asking for a list of pods. Good k8s practice is to separate master/captain and compute nodes. The captain nodes are solely responsible for running critical k8s services/tasks for the cluster, and the compute nodes are responsible for running services like CyberChef or GitLab.

Unfortunately, early on in my journey with k8s I created services with persistent storage using local-storage on my single-node “cluster”, more specifically on what became my captain node. When you use local-storage as the persistent volume option, it locks services using that volume to that specific node. Next, I started Googling how to migrate persistent storage from one node to another and/or from local-storage to my Synology device. In my experience and research, moving persistent storage is not really possible with vanilla k8s. The options that exist are for cloud platforms and/or walk you through some hacky solution. I personally tried these hacky solutions and they caused more headaches and data loss than success. My recommendation is to move forward under the assumption that it’s not possible and that these hacky solutions are really a last resort.

My advice/setup

In my homelab, I use local-storage and Synology for persistent storage. My recommendation would be to use Synology or an equivalent network storage service like NFS for “production” services. For example, in my homelab I deem the following services “production” services: GitLab, Vault secrets store, Prometheus metrics, and MinIO (S3 alternative). The guiding questions I use to determine if a service should use Synology for persistent storage are “If I lost the data tomorrow, would I be upset?” or “Would the downtime of this service affect me?”.

For example, I run Pi-hole on my k8s cluster using Synology as persistent storage. If I lost the data curated by Pi-hole I wouldn’t be upset, but if that service goes down my entire home network is affected. Occasionally, I bring down nodes for general maintenance such as applying updates. If Pi-hole were using local-storage and I brought down the node the volume was sitting on, my home network would not be able to resolve DNS. But because I use Synology, that volume can be used by another node, allowing me to safely bring down nodes without interrupting my home network.

Lastly, local-storage is really great for testing or tinkering when the data will eventually be deleted. For example, I am currently learning how to create Helm charts and I am trying to build a chart for a common open-source application. As I learn how to do this, I am using local-storage as my dumping ground. In fact, I recently purchased a cheap SSD to increase my local-storage capacity: I was doing so much prototyping that I ran out of local storage on a single node, so it could no longer pull and store container images, and the services running on that node were impacted as a result.

Option 1: Configure local storage

- Install local-path storage:

kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

kubectl get sc
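To try the new class, a minimal sketch is a claim plus a pod that writes to it; the object names are hypothetical. The local-path StorageClass created by that manifest uses WaitForFirstConsumer binding, so the claim stays Pending until a pod uses it, and the volume is then created under /opt/local-path-provisioner on whichever node the pod landed on. That is exactly the node lock-in described above.

```yaml
# Hypothetical test objects for the local-path StorageClass.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-path-test       # hypothetical name
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce           # local-path volumes live on one node's disk
  storageClassName: local-path
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: local-path-test-pod   # hypothetical name
  namespace: default
spec:
  containers:
    - name: writer
      image: busybox
      # Write a file to the volume, then keep the pod alive for inspection
      command: ["sh", "-c", "echo hello > /data/hello.txt && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: local-path-test
```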

Option 2: Configure synology-csi driver

Create a user account

The synology-csi driver requires an administrator account on your Synology device. The driver uses this account to log in via the web API and create the necessary resources (iSCSI target and LUN volume).

- Log into the Synology web portal as an administrative user

- Control Panel > Users & Groups

- Select “Create”

- User info

- Enter a username into Name

- Create a description for the user account

- Enter password

- Join groups

- Select “Administrators” group


- Assign shared folder permissions

- Leave as default

- Assign user quota

- Leave as default

- Assign application permissions

- Leave as default


Configure synology-csi driver

git clone https://github.com/CptOfEvilMinions/BlogProjects

cd BlogProjects/microk8s/synology-storage

cp client-info.yml.example client-info.yml

- Set username, password, and host (Synology IP/FQDN):

vim client-info.yml
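For reference, a filled-in client-info.yml is expected to look roughly like this; the field names follow the template in the synology-csi repository as I remember it (verify against your checkout), and the host, username, and password are hypothetical placeholders for the account created above.

```yaml
# Hypothetical client-info.yml for synology-csi. Field names follow the
# template in the synology-csi repository; verify against your checkout.
clients:
  - host: 10.150.100.20       # hypothetical Synology IP or FQDN
    port: 5000                # DSM web API port (5001 when https is true)
    https: false
    username: k8s-csi         # hypothetical account created above
    password: <password>
```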

kubectl create ns synology-csi

kubectl create secret generic client-info-secret -n synology-csi --from-file=./client-info.yml

- Set the StorageClass parameters:

vim storage-class.yml

cp storage-class.yml synology-csi/deploy/kubernetes/v1.20/storage-class.yml

cd ./synology-csi

- MicroK8s uses a different directory for kubelet storage. This sed statement updates the k8s deployment to use the proper path (macOS sed syntax; on Linux, drop the ''):

sed -i '' 's#/var/lib/kubelet#/var/snap/microk8s/common/var/lib/kubelet#g' deploy/kubernetes/v1.20/node.yml
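The storage-class.yml parameters are easiest to understand with an example. Below is a sketch modeled on the example StorageClass shipped in the synology-csi repository (provisioner csi.san.synology.com with dsm, location, and fsType parameters); the class name, IP, and volume path are hypothetical, so confirm the parameter names against the version you cloned.

```yaml
# Sketch of storage-class.yml for synology-csi. Parameter names follow the
# example in the synology-csi repository; values are hypothetical.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: synology-iscsi-storage  # hypothetical name
provisioner: csi.san.synology.com
parameters:
  dsm: "10.150.100.20"          # must match a host listed in client-info.yml
  location: /volume1            # Synology volume that will hold the LUNs
  fsType: ext4
reclaimPolicy: Retain           # keep the LUN if the PVC is deleted
allowVolumeExpansion: true
```

Retain suits the “production” data discussed above, but it also means deleted claims leave their LUNs behind for manual cleanup in DSM; Delete is the alternative for throwaway volumes.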

Install synology-csi driver

- Install the synology-csi driver:

./scripts/deploy.sh install --basic

kubectl get sc

Test synology-csi driver

cd ..

kubectl apply -f pvc-test.yaml

kubectl get pvc

kubectl delete -f pvc-test.yaml
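pvc-test.yaml ships in the repo; a minimal equivalent is a single claim against the Synology class. The claim name is hypothetical, and synology-iscsi-storage is a hypothetical class name, so use whatever name your storage-class.yml sets. Unless that class sets WaitForFirstConsumer, binding is immediate, so kubectl get pvc should show Bound without any pod, and a new LUN should appear in Synology’s SAN Manager.

```yaml
# Hypothetical minimal equivalent of pvc-test.yaml.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: synology-test-pvc                    # hypothetical name
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce                          # an iSCSI LUN attaches to one node at a time
  storageClassName: synology-iscsi-storage   # hypothetical; use your class name
  resources:
    requests:
      storage: 1Gi
```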

Deploy Traefik, cert-manager, and Cyberchef

Why Traefik?

The reason is simple: it has a pretty UI that provides insight into the current state/configuration of ingress routes. Choosing Traefik over the default ingress controller was a decision I made after several failed attempts to deploy a web application, and because I wanted to use a familiar tool (I used Traefik in my homelab Docker environment). All the k8s wizards around the world are probably saying “just run this kubectl command”, but in the beginning I didn’t know better. Kubernetes has a lot of moving parts, and when you follow a blog post and it fails, it’s hard to know why OR how to debug it in the beginning. Traefik provided a way for me to confirm that, at a minimum, the ingress route was configured correctly. I needed a tool that met me at my current skill level to help me understand if I was on the right track. I was also unaware at the time that the default Kubernetes dashboard would provide the same insight as Traefik.

In retrospect, after working with Kubernetes for about 6 months, I can say the experience of using Traefik has been bittersweet. By default, almost all Helm charts and/or services you can deploy on k8s will work with the default NGINX ingress controller. In some instances, I found it harder to modify Helm charts to use Traefik. Thankfully, if Helm chart authors use good practices this isn’t an issue, but I have experienced it. Lastly, I will let readers know that I am actively transitioning from Traefik to NGINX in my homelab. Again, Traefik is an amazing reverse proxy and one that I adored as a staple in my Docker setup for years, but for k8s it doesn’t have that same charm.

This experience demonstrates the beauty/ugliness of k8s. The beauty is that you aren’t locked into a single technology for different components of your k8s cluster. However, the ugliness is that when forging a path with a particular service, you may need to become a subject matter expert (SME) for that component. I personally learned a MEGATON when I chose to use Traefik over NGINX. It was on me to learn how to override Helm charts and how to retrofit services to use Traefik. The journey was painful at times, but I came out the other side with more knowledge.

Cert-manager

Configure cert-manager

cert-manager is a great framework that takes the hassle out of creating, rotating, and managing TLS certificates for your MicroK8s cluster. In this blog post, we are going to keep it simple and generate a self-signed root CA on the fly. We will use this root CA to generate leaf certificates for our services. In future blog posts, I will demonstrate how to use Vault in this process. The YAML config below generates a root CA and then configures cert-manager to use that CA to generate subsequent certificates for services. The first YAML config blob sets up a k8s resource of kind ClusterIssuer, which other resources can call upon when they need to generate a certificate. The second YAML config blob uses cert-manager to generate a root CA (isCA: true) with a common name of hackinglab-kubes-ca, which should be changed to match your local DNS zone, and lastly informs cert-manager to use this root CA cluster-wide for issuing certificates. Note that the cert-manager CRDs must exist before root-ca.yaml can be applied, so run the steps in “Deploy cert-manager” below first.
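Here is a sketch of one common way to express this, based on the CA bootstrap pattern in the cert-manager documentation: a self-signed ClusterIssuer signs a root CA Certificate, and a second ClusterIssuer of type ca uses that root CA to sign leaf certificates. root-secret and hackinglab-kubes-ca come from this post; the issuer names and key settings are hypothetical, and the repo’s root-ca.yaml may split things differently.

```yaml
# Sketch of root-ca.yaml following the cert-manager CA bootstrap pattern.
# Issuer names and key settings are hypothetical.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: selfsigned-issuer         # hypothetical: only used to sign the root CA
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: hackinglab-kubes-ca
  namespace: cert-manager         # ClusterIssuers read CA secrets from here by default
spec:
  isCA: true                      # this certificate is a CA, not a leaf
  commonName: hackinglab-kubes-ca # change to match your local DNS zone
  secretName: root-secret         # the secret the root CA is exported from
  privateKey:
    algorithm: ECDSA
    size: 256
  issuerRef:
    name: selfsigned-issuer
    kind: ClusterIssuer
    group: cert-manager.io
---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: hackinglab-ca-issuer      # hypothetical: referenced by leaf certificates
spec:
  ca:
    secretName: root-secret       # sign leaf certificates with the root CA above
```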

cd BlogProjects/microk8s/cert-manager

Generate root CA

cp root-ca.yaml.example root-ca.yaml

vim root-ca.yaml and set values for your env like this

kubectl apply -f root-ca.yaml

- Export root CA:

kubectl get secrets -n cert-manager root-secret -o jsonpath="{.data.ca\.crt}" | base64 --decode > k8s-root-ca.crt

- OPTIONAL: Import the root CA into your browser to instruct the browser to trust the cert

Deploy cert-manager

kubectl create namespace cert-manager

- Add cert-manager Helm repo:

helm repo add jetstack https://charts.jetstack.io

helm repo update

- Install cert-manager:

helm install cert-manager jetstack/cert-manager -n cert-manager --set installCRDs=true

Traefik

The Traefik configuration is pretty standard, with nothing special. At a high level, the config instructs Helm to spin up three instances of Traefik so that we have redundancy for load balancing HTTP/HTTPS requests in our homelab. We also apply security measures by restricting what the Traefik containers can and cannot do.
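A hypothetical traefik-values.yaml along those lines is sketched below. The key names match the traefik/traefik chart as I know it (deployment.replicas, service.type, ingressRoute.dashboard.enabled, podSecurityContext, securityContext), but charts change between versions, so confirm each one with helm show values traefik/traefik before relying on it.

```yaml
# Hypothetical traefik-values.yaml for the traefik/traefik chart.
# Confirm key names with `helm show values traefik/traefik`.
deployment:
  replicas: 3                     # three Traefik pods for redundancy
service:
  type: LoadBalancer              # MetalLB hands out the external IP
ingressRoute:
  dashboard:
    enabled: false                # expose the dashboard with our own IngressRoute instead
podSecurityContext:
  runAsNonRoot: true
  runAsUser: 65532
  runAsGroup: 65532
securityContext:
  allowPrivilegeEscalation: false
  readOnlyRootFilesystem: true
  capabilities:
    drop: [ALL]
```

Running as a non-root user works here because the chart binds Traefik to unprivileged container ports and lets the Service map 80/443 onto them.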

Deploy Traefik

kubectl create ns traefik

helm repo add traefik https://traefik.github.io/charts

helm install traefik traefik/traefik -n traefik -f traefik-values.yaml

Set FreeIPA DNS A record for Traefik

- Get external IP address for load balancer

kubectl get svc -n traefik

- Log into FreeIPA

- Network Services > DNS > DNS Zones > <DNS Zone>

- Select “Add”

- Enter traefik for record name

- Select A for record type

- Enter the <Traefik load balancer external IP addr> for IP address

- Select “Add”

- Open a terminal

dig <Traefik FQDN> @<FreeIPA DNS server>

Expose the Traefik dashboard with a Traefik ingress route and a signed cert

vim dashboard-ingressroute.yaml and set values to match your environment

kubectl apply -f dashboard-ingressroute.yaml

- Open a web browser to

https://<traefik FQDN>/dashboard/
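For reference, dashboard-ingressroute.yaml is expected to pair a cert-manager Certificate with a Traefik IngressRoute. The sketch below assumes Traefik v2.10 or later (traefik.io/v1alpha1 API group) and uses a hypothetical FQDN, secret, and CA ClusterIssuer name; point issuerRef at the CA issuer you actually created. The route sends /dashboard and /api requests to api@internal, Traefik’s built-in dashboard service.

```yaml
# Hypothetical dashboard-ingressroute.yaml. FQDN, secret, and issuer
# names are placeholders.
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: traefik-dashboard
  namespace: traefik
spec:
  secretName: traefik-dashboard-tls   # cert-manager stores the signed cert here
  commonName: traefik.hackinglab.kubes
  dnsNames:
    - traefik.hackinglab.kubes
  issuerRef:
    name: hackinglab-ca-issuer        # hypothetical CA ClusterIssuer name
    kind: ClusterIssuer
---
apiVersion: traefik.io/v1alpha1       # traefik.containo.us/v1alpha1 on older releases
kind: IngressRoute
metadata:
  name: traefik-dashboard
  namespace: traefik
spec:
  entryPoints:
    - websecure                       # the chart's default HTTPS entrypoint
  routes:
    - match: Host(`traefik.hackinglab.kubes`) && (PathPrefix(`/dashboard`) || PathPrefix(`/api`))
      kind: Rule
      services:
        - name: api@internal          # Traefik's built-in dashboard/API service
          kind: TraefikService
  tls:
    secretName: traefik-dashboard-tls
```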

Where are my ports?!?!!?

Kubernetes can sometimes feel like voodoo dark magic. For example, let’s take our Traefik ingress controller, which is listening for network connections on port 443. If you run kubectl get svc -n traefik, you will see Traefik with a service type of LoadBalancer, a Cluster-IP of 10.152.183.244 (highlighted in green), an External-IP from your pfSense DHCP pool (10.150.100.50), and listening on ports 80 (HTTP) and 443 (HTTPS) externally (highlighted in red). Now if you run the following command: netstat -tnlp | grep 443, it doesn’t return a listening service on port 443 (screenshot below)?!?!?!

Let’s take a moment to demystify this voodoo dark magic performed by Kubernetes + iptables using the screenshot above. Strap in, it’s a doozy! Kubernetes (via kube-proxy) performs service routing using iptables. The iptables PREROUTING chain is configured to send all incoming traffic to the KUBE-SERVICES chain (first blue box). For context, the KUBE-SERVICES chain contains a list of rules for all the k8s services (svc). If we follow this chain and filter for Traefik-based rules, iptables should return two rules related to routing HTTP (KUBE-EXT-UIRTXPNS5NKAPNTY) and HTTPS (KUBE-EXT-LODJXQNF3DWSNB7B) traffic. The second blue box with the connecting dotted red line demonstrates that we have found the corresponding iptables rules, because the destination IP address matches the service load balancer’s external IP address.

For this example, we are going to select the HTTPS rule (KUBE-EXT-LODJXQNF3DWSNB7B, third blue box) and follow its iptables chain. This chain presents us with another rule (KUBE-SVC-LODJXQNF3DWSNB7B). Based on its name, this rule points to the k8s svc with a cluster IP of 10.152.183.244. The end of this chain is an iptables rule whose destination IP address matches our load balancer service’s ClusterIP (highlighted in green). At a high level, this rule performs the actual load balancing by distributing incoming connections across the rules that follow it (green arrows).
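Here is a more concrete picture of "distributing the load over the rules that follow it." In iptables mode, kube-proxy writes one KUBE-SEP-* jump per endpoint into the KUBE-SVC-* chain. Every jump except the last uses the statistic module (-m statistic --mode random --probability p) to decide whether to match.

The Python sketch below models that arithmetic. It assumes n endpoints, and that rule i (counting from 0) matches with probability 1/(n - i). With a single Traefik pod there is only one rule, and it always matches.

```python
import random
from collections import Counter


def kube_proxy_probabilities(n_endpoints: int) -> list[float]:
    """Match probability of each KUBE-SEP jump in a KUBE-SVC chain.

    Rule i (0-based) matches with probability 1/(n - i); the last rule gets
    1.0, i.e. it is effectively unconditional.
    """
    return [1.0 / (n_endpoints - i) for i in range(n_endpoints)]


def effective_shares(probs: list[float]) -> list[float]:
    """Fraction of new connections that end up at each endpoint."""
    shares, remaining = [], 1.0
    for p in probs:
        shares.append(remaining * p)  # traffic reaching this rule that matches it
        remaining *= 1.0 - p          # traffic that falls through to the next rule
    return shares


def pick_endpoint(probs: list[float], rng: random.Random) -> int:
    """Walk the rules top to bottom like iptables and return the first match."""
    for i, p in enumerate(probs):
        if rng.random() < p:  # random() is in [0, 1), so p == 1.0 always matches
            return i
    raise AssertionError("unreachable: the last rule always matches")


if __name__ == "__main__":
    probs = kube_proxy_probabilities(3)
    print(probs)                    # roughly [0.333, 0.5, 1.0]
    print(effective_shares(probs))  # each roughly 1/3
    rng = random.Random(0)
    print(Counter(pick_endpoint(probs, rng) for _ in range(30_000)))
```

Because the rules are walked in order, each endpoint ends up with about 1/n of new connections. This is why the per-rule probabilities in the iptables output look uneven even though the split is even.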

The goal of this section was to show that traditional debugging methods can be a red herring with Kubernetes, yet the fundamentals behind them are still useful. I know that sounds contradictory, so let me elaborate.

On a traditional Linux instance, if you can’t access a service, one debugging technique is to check whether it’s listening and, if so, on the right port/interface. As demonstrated above, that technique doesn’t work here, and it led me down a rabbit hole. However, once I finally got on the right track, my fundamental understanding of iptables was very helpful in navigating the maze of Kubernetes routing.

Deploy Cyberchef

To deploy Cyberchef, I created a k8s manifest file. Breaking down every attribute of this manifest is outside the scope of this blog post. At a high level, however, the manifest uses all the services we deployed.

First, it generates a leaf certificate signed by the root CA used by cert-manager. We instruct cert-manager to make the certificate valid for 3 months and to rotate it a week before it expires. We also specify the common name in the certificate for our service, which in this case is cyberchef.hackinglab.kubes.
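For reference, here is a hedged sketch of the cert-manager Certificate portion, built as a Python dict. The assumptions:

- 3 months means 2160h (90 days), and a week is 168h.
- duration and renewBefore are the cert-manager fields that control these values.
- The ClusterIssuer and Secret names are hypothetical.

The renewal_time helper shows the arithmetic: cert-manager renews at expiry minus renewBefore, which is 83 days after issuance.

```python
import json
from datetime import datetime, timedelta, timezone

# Assumptions: "3 months" ~ 90 days, "a week before it expires" = 7 days
DURATION = timedelta(hours=2160)
RENEW_BEFORE = timedelta(hours=168)


def hours(td: timedelta) -> str:
    """Format a timedelta the way cert-manager durations are written, e.g. '2160h'."""
    return f"{int(td.total_seconds() // 3600)}h"


def cyberchef_certificate(issuer: str, secret_name: str = "cyberchef-tls") -> dict:
    """cert-manager Certificate for CyberChef; issuer/secret names are hypothetical."""
    return {
        "apiVersion": "cert-manager.io/v1",
        "kind": "Certificate",
        "metadata": {"name": "cyberchef", "namespace": "cyberchef"},
        "spec": {
            "secretName": secret_name,  # where cert-manager stores the key pair
            "commonName": "cyberchef.hackinglab.kubes",
            "dnsNames": ["cyberchef.hackinglab.kubes"],
            "duration": hours(DURATION),
            "renewBefore": hours(RENEW_BEFORE),
            "issuerRef": {"name": issuer, "kind": "ClusterIssuer"},
        },
    }


def renewal_time(not_before: datetime) -> datetime:
    """cert-manager renews at (notBefore + duration) - renewBefore."""
    return not_before + DURATION - RENEW_BEFORE


if __name__ == "__main__":
    print(json.dumps(cyberchef_certificate("homelab-ca-issuer"), indent=2))
    issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
    print(renewal_time(issued))  # 2024-03-24 00:00:00+00:00
```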

Second, we define a Traefik IngressRoute that uses the HTTPS entrypoint and watches for requests containing the host header cyberchef.hackinglab.kubes. If a request coming into Traefik contains this host header, Traefik forwards it to the cyberchef k8s service. Lastly, we define a k8s service + pod resource that work together to run the Cyberchef application and ensure that at least one instance is always running.
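The service + pod part can be sketched the same way. This assumes a Deployment with replicas: 1, which is what keeps one instance running: if the pod dies, the controller recreates it. It also assumes a Service whose selector matches the pod template labels. The image name is a placeholder, and container port 8000 is an assumption about your CyberChef image. The selector_matches helper only illustrates how a Service picks its pods.

```python
import json

LABELS = {"app": "cyberchef"}
IMAGE = "<cyberchef-image>"  # placeholder: substitute the CyberChef image you use
CONTAINER_PORT = 8000        # assumption: the port your image listens on


def cyberchef_deployment() -> dict:
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "cyberchef", "namespace": "cyberchef"},
        "spec": {
            "replicas": 1,  # the controller recreates the pod if it goes away
            "selector": {"matchLabels": LABELS},
            "template": {
                "metadata": {"labels": LABELS},
                "spec": {"containers": [{
                    "name": "cyberchef",
                    "image": IMAGE,
                    "ports": [{"containerPort": CONTAINER_PORT}],
                }]},
            },
        },
    }


def cyberchef_service() -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "cyberchef", "namespace": "cyberchef"},
        "spec": {
            "selector": LABELS,  # must match the pod template labels above
            "ports": [{"port": 80, "targetPort": CONTAINER_PORT}],
        },
    }


def selector_matches(selector: dict, labels: dict) -> bool:
    """A Service selects a pod when every selector key/value is in its labels."""
    return all(labels.get(k) == v for k, v in selector.items())


if __name__ == "__main__":
    # kubectl accepts a v1 List on stdin: python3 cyberchef.py | kubectl apply -f -
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": [cyberchef_deployment(), cyberchef_service()]},
                     indent=2))
```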

- A Simple CA Setup with Kubernetes Cert Manager

- Youtube: [ Kube 101.2 ] Traefik v2 | Part 2 | Creating IngressRoutes

kubectl create ns cyberchef

kubectl apply -f cyberchef.yaml

- Create a DNS CNAME record that points at the Traefik record we created earlier

- Open a web browser to

https://cyberchef.<domain>

Deploy Grafana and Prometheus

Deploying Grafana and Prometheus uses all the components created throughout this blog post. Prometheus will use the default storage class to provision space for metrics, and Grafana will be served behind Traefik with a signed TLS certificate. Together, Grafana and Prometheus provide insight into the health and state of your MicroK8s “cluster”.
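The values.yaml passed to helm install isn’t shown in this post. Here is a minimal sketch of what it might contain, written in Python and dumped as JSON; JSON is valid YAML, so Helm’s -f accepts it. The sketch makes two assumptions:

- Grafana reuses the grafana-admin secret created in the steps below, via the chart’s grafana.admin.existingSecret/userKey/passwordKey values.
- Prometheus gets a 10Gi volume claim that omits storageClassName, so the default storage class is used. The size is an arbitrary example.

```python
import json


def kube_prometheus_values(storage: str = "10Gi") -> dict:
    """Minimal kube-prometheus-stack values (a sketch, not the post's actual file)."""
    return {
        "grafana": {
            "admin": {
                # Point Grafana at the grafana-admin Secret instead of a generated password
                "existingSecret": "grafana-admin",
                "userKey": "admin-user",
                "passwordKey": "admin-password",
            },
        },
        "prometheus": {
            "prometheusSpec": {
                "storageSpec": {
                    "volumeClaimTemplate": {
                        "spec": {
                            # No storageClassName: the default StorageClass provisions it
                            "accessModes": ["ReadWriteOnce"],
                            "resources": {"requests": {"storage": storage}},
                        },
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    # JSON is valid YAML: python3 values.py > values.yaml
    print(json.dumps(kube_prometheus_values(), indent=2))
```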

- Create a namespace:

kubectl create ns metrics

- Generate a random password for the Grafana admin:

kubectl create secret generic grafana-admin -n metrics --from-literal=admin-user=admin --from-literal=admin-password=$(openssl rand -base64 32 | tr -cd '[:alnum:]')

- Add Helm repo:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

- Install Prometheus and Grafana with Helm:

helm install prometheus prometheus-community/kube-prometheus-stack -n metrics -f values.yaml

- Create TLS cert for Grafana and Traefik ingress route:

kubectl apply -f ingress.yaml

- Verify the persistent volume claim and pods:

kubectl get pvc -n metrics

kubectl get pods -n metrics

- Open a browser to

https://grafana.<domain>

- Login

- Username: admin

- Password:

kubectl get secret --namespace metrics grafana-admin -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

- Dashboards > Kubernetes / Compute Resources / Pod
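Two things happen under the hood in the password steps above:

- openssl rand -base64 32 | tr -cd '[:alnum:]' produces a random alphanumeric string. It is roughly 40 characters long, because tr strips the +, / and = characters.
- The Secret stores that value base64-encoded, which is why reading it back needs base64 --decode.

Here is a small Python sketch of both. It uses the standard library’s secrets module as a stand-in for openssl, and the fixed 40-character length is an assumption.

```python
import base64
import secrets
import string

ALNUM = string.ascii_letters + string.digits


def random_alnum_password(length: int = 40) -> str:
    """Rough stand-in for `openssl rand -base64 32 | tr -cd '[:alnum:]'`."""
    return "".join(secrets.choice(ALNUM) for _ in range(length))


def encode_secret_data(values: dict[str, str]) -> dict[str, str]:
    """Kubernetes stores Secret .data values base64-encoded (encoded, not encrypted)."""
    return {k: base64.b64encode(v.encode()).decode() for k, v in values.items()}


def decode_secret_value(data: dict[str, str], key: str) -> str:
    """Same as: -o jsonpath="{.data.<key>}" | base64 --decode"""
    return base64.b64decode(data[key]).decode()


if __name__ == "__main__":
    data = encode_secret_data({"admin-user": "admin",
                               "admin-password": random_alnum_password()})
    print(data["admin-user"])                      # YWRtaW4=
    print(decode_secret_value(data, "admin-user"))  # admin
```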

Areas for exploration

Unfortunately, I can’t cover everything in this blog post, so this section is devoted to additional areas you could investigate in your homelab environment as part of your Act 3 and 4.

Debugging: kubectl describe/kubectl logs/kubectl exec

kubectl describe, kubectl logs, and kubectl exec are the three commands I use most when diagnosing an issue on k8s. Typically, when a pod fails to even start, I use kubectl describe first to determine the issue. If it’s a simple issue, like specifying the wrong secret name, that error will be shown. This command can also surface issues such as failing to pull a container image or trouble mounting a volume.

Once a pod has started but fails to keep running, I use kubectl logs. This command dumps the stdout and stderr generated by the pod’s containers.

Finally, as a last resort, I use kubectl exec to start a shell in the container. This method has varying success. Scratch containers don’t have a shell at all: the only thing in the container is the application itself, so this method cannot be used. I have found that many images are published in both a scratch variant and an Alpine/Ubuntu-based variant. You can update the Helm chart values to use the alternative image, which should allow starting a shell.

Mileage also varies with this method when a container is restarting rapidly, because any shell you create will be terminated quickly. I don’t have a good solution for this. The best I can offer is to run a single command instead of starting a shell and hope you get lucky.
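Here is a rough sketch of that triage order. The Python function below reads the JSON from kubectl get pods -o json and suggests a next command:

- describe, for pods stuck waiting (image pulls, missing secrets, volumes) or never started
- logs, for CrashLoopBackOff

For the fast-restart problem it adds kubectl logs --previous, which prints the output of the last terminated instance of the container. The field names follow the Pod status API. The mapping from reason to command is a simplified heuristic, not an exhaustive list.

```python
def triage(pods_json: dict) -> list[tuple[str, str, str]]:
    """Return (pod, reason, suggested command) for pods that look unhealthy.

    pods_json is the parsed output of `kubectl get pods -n <ns> -o json`.
    """
    findings = []
    for pod in pods_json.get("items", []):
        name = pod["metadata"]["name"]
        ns = pod["metadata"].get("namespace", "default")
        status = pod.get("status", {})
        statuses = status.get("containerStatuses", [])
        for cs in statuses:
            waiting = cs.get("state", {}).get("waiting")
            if not waiting:
                continue
            reason = waiting.get("reason", "Waiting")
            if reason == "CrashLoopBackOff":
                # Started but keeps dying: read the output of the last crashed instance
                cmd = f"kubectl logs -n {ns} {name} -c {cs['name']} --previous"
            else:
                # Image pulls, missing secrets, volume mounts: describe shows the events
                cmd = f"kubectl describe pod -n {ns} {name}"
            findings.append((name, reason, cmd))
        if status.get("phase") == "Pending" and not statuses:
            # Not scheduled yet, so no containers exist; describe shows why
            findings.append((name, "Pending", f"kubectl describe pod -n {ns} {name}"))
    return findings
```

Assuming the function is saved in a hypothetical triage.py, run it with kubectl get pods -n metrics -o json | python3 -c 'import json, sys; from triage import triage; print(triage(json.load(sys.stdin)))'.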

Teleport/“SSO” login

Setting up SSO login for your Kubernetes cluster is not an easy task, and the available options are specific to each environment. One common option is to set up Dex so you can log into Kubernetes via kubectl using OpenID Connect. However, the kubectl config (kubeconfig) then needs to contain the client secret for the OpenID connector. IMO, in a homelab the risk of storing this secret is pretty minimal, but in an enterprise environment it’s not ideal. Therefore, I opted not to demonstrate this setup.

One option that avoids this issue is Teleport, which supports k8s. Unfortunately, the Teleport community edition only supports GitHub as an SSO provider, and I personally use Keycloak in my homelab env. That being said, Teleport does allow me to create local user accounts that I can use to grant access to my homelab k8s cluster. Additionally, Teleport is designed around short-lived credentials for everything, which is a bonus from a security perspective. Lastly, Teleport supports WebAuthn, more specifically YubiKey. This means a user must complete a WebAuthn challenge with their YubiKey to access my k8s homelab “cluster”.

External-dns

As we observed above, k8s really does provide a platform that automates a ton of actions when spinning up a service. However, out of the box there is no way to create DNS records if your homelab environment has a DNS server with a local zone, like hackinglab.kubes. External-dns provides this capability in an automated fashion. I was unable to get it working with Traefik, but it does work with FreeIPA using the rfc2136 provider with NGINX as the ingress controller.
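To get a feel for what external-dns automates, here is a rough manual equivalent. It is an RFC 2136 dynamic update that adds the traefik A record and the cyberchef CNAME from earlier, expressed as nsupdate input generated from Python.

The sketch leaves several things as assumptions:

- that dynamic updates are enabled on the FreeIPA zone
- authentication, which is not shown (a TSIG key, or GSS-TSIG via a Kerberos ticket and nsupdate -g)
- the server name and TTL, which are hypothetical

```python
def nsupdate_script(server: str, zone: str,
                    records: list[tuple[str, int, str, str]]) -> str:
    """Build nsupdate(1) input adding (name, ttl, type, data) records to zone."""
    lines = [f"server {server}", f"zone {zone}"]
    for name, ttl, rtype, data in records:
        # Relative names are expanded to absolute FQDNs inside the zone
        fqdn = name if name.endswith(".") else f"{name}.{zone}."
        lines.append(f"update add {fqdn} {ttl} {rtype} {data}")
    lines.append("send")  # send the batched RFC 2136 UPDATE message
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical server and TTL; pipe into `nsupdate -g` after `kinit` on FreeIPA
    print(nsupdate_script(
        "ipa.hackinglab.kubes",
        "hackinglab.kubes",
        [
            ("traefik", 300, "A", "10.150.100.50"),
            ("cyberchef", 300, "CNAME", "traefik.hackinglab.kubes."),
        ],
    ), end="")
```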

Lessons learned

My initial thoughts on Kubernetes are that it is a super powerful tool, and I am thoroughly enjoying migrating my Docker setup to k8s. However, when the simplest things go wrong while you’re trying to learn k8s, you can spend countless hours heading in the wrong direction. This has happened to me several times, but I have come out of each debug session knowing more about k8s. So while this may be the typical process of learning something, I found it significantly more painful and demotivating with k8s. The only thing I can offer newcomers is that you’re not alone, and to keep at it.

For example, I spent an entire week trying to determine why I wasn’t able to reach a resource in my local network via an FQDN. After hours of debugging and trying every StackOverflow post, I found out that it was a DNS issue.

Traditional debugging methods only work sometimes

In the example above with netstat, I demonstrated that Traefik was listening for incoming traffic even though there were no open ports on the host. Kubernetes is really fascinating in that it abstracts away the nuances of networking, DNS, storage, secret management, container deployment, etc. from the user. This abstraction, WHEN IT WORKS, lowers the bar and lets engineers focus on more important things, such as writing code. However, when these abstractions break, it can be really hard for beginners to know WHERE to begin or how to triage an issue. Additionally, many of these abstractions are just layers around pre-existing technology (DNS, networking, containerd). Understanding that technology is useful, but it can also be a red herring.

In fact, traditional debugging methods have pigeonholed my thinking when debugging problems on k8s. If you want to truly embrace Kubernetes, it’s good to understand the fundamental technologies it uses, but also to learn how to leverage Kubernetes tools when debugging.

Docker Swarm vs. Kubernetes for homelab

If you peruse my old blog posts, you will see that I almost exclusively used Docker for deploying services. When it comes to prototyping and needing a quick software stack, you can’t beat the simplicity AND speed of Docker. It’s also extremely portable and well known by the larger community.

The real driving forces for porting my Docker setup to Kubernetes were cert-manager and wanting to learn Kubernetes. In my homelab, I run my own CA, which means all my services use a signed certificate. However, Docker doesn’t provide a good mechanism to automatically rotate these certificates. Thus, like every lazy admin, I let my certificates go stale and would just tell Chrome to ignore the security issue. I even had “scripts” to automate generating certificates, but I didn’t maintain them well, so when it came time to generate a new certificate it felt like such a chore. I almost got to the point of asking “why run a root CA?”.

Thankfully, cert-manager came to the rescue and was the shining beacon of hope for me. Cert-manager handles the generation and rotation of certificates for all of my services, which lets me run my own root CA with purpose again. I also set up external-dns to automate creating DNS records for new services. Capabilities and automation like this ultimately helped me learn Kubernetes.

That brings me to my epiphany on k8s in a homelab: I personally believe migrating from Docker Swarm to Kubernetes for your homelab is ONLY worth it if you want to learn Kubernetes. As I stated above, Docker makes it really easy to spin up a service, and it will happily keep running it in that state till the end of time.

New skills/knowledge

- Learned Kubernetes

- Learned how to deploy services using k8s manifests and Helm

- Learned cert-manager

- Learned Traefik

- Learned how to manage persistent storage

- Learned how to debug k8s issues

- Learned how to monitor my cluster with Grafana/Prometheus

Challenges

- Debugging k8s issues

Future improvements

- Set up OIDC login via kubectl using a provider such as GitHub or Keycloak
