Goals

- Set up Graylog stack with Docker

- Set up Graylog with Ansible

- Set up Graylog stack with Vagrant

- Set up Graylog with manual instructions

- Test Graylog with Python script

- Ingest AuditD logs into Graylog with Auditbeat

- Ingest Osquery logs into Graylog with Filebeat

Update log

- July 15th 2021 – Updated Docker and Ansible playbooks from Graylog v4.0 to v4.1

- July 15th 2021 – Updated Docker and Ansible playbooks from Elasticsearch v7.10 to v7.13

- August 30th 2021 – Added Vagrantfile for Graylog

- October 24th 2021 – Updated Docker and Ansible playbooks from Graylog v4.1 to v4.2

- December 30th 2021 – Updated Docker and Ansible playbooks from Graylog v4.2 to v4.2.4

  - This update mitigates the log4j vulnerability

Background

What is Graylog?

Graylog is a leading centralized log management solution built to open standards for capturing, storing, and enabling real-time analysis of terabytes of machine data. Purpose-built for modern log analytics, Graylog removes complexity from data exploration, compliance audits, and threat hunting so you can quickly and easily find meaning in data and take action faster.

What is AuditD?

The Linux Auditing System is a native feature to the Linux kernel that collects certain types of system activity to facilitate incident investigation. The Linux Auditing subsystem is capable of monitoring three distinct items:

- System calls: See which system calls were called, along with contextual information like the arguments passed to them, user information, and more.

- File access: This is an alternative way to monitor file access activity, rather than directly monitoring the open system call and related calls.

- Select, pre-configured auditable events within the kernel: Red Hat maintains a list of these types of events.

What is Auditbeat?

Collect your Linux audit framework data and monitor the integrity of your files. Auditbeat ships these events in real time to the rest of the Elastic Stack for further analysis.

What is Osquery?

Osquery exposes an operating system as a high-performance relational database. This allows you to write SQL-based queries to explore operating system data. With osquery, SQL tables represent abstract concepts such as running processes, loaded kernel modules, open network connections, browser plugins, hardware events or file hashes.

What is Filebeat?

Filebeat is a lightweight shipper for forwarding and centralizing log data. Installed as an agent on your servers, Filebeat monitors the log files or locations that you specify, collects log events, and forwards them to Logstash for indexing.

Network diagram

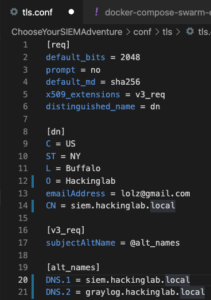

Generate OpenSSL public certificate and private key

git clone https://github.com/CptOfEvilMinions/ChooseYourSIEMAdventure

cd ChooseYourSIEMAdventure

vim conf/tls/tls.conf and set:

- Set the location information under [dn]

  - C – Set country

  - ST – Set state

  - L – Set city

  - O – Enter organization name

  - emailAddress – Enter a valid e-mail for your org

- Replace example.com in all fields with your domain

- For alt names, list all the valid DNS records for this cert

- Save and exit

openssl req -x509 -new -nodes -keyout conf/tls/tls.key -out conf/tls/tls.crt -config conf/tls/tls.conf

- Generate TLS private key and public certificate

Install Graylog with Docker-compose v2.x

WARNING

The Docker-compose v2.x setup is for development use ONLY. The setup contains hard-coded credentials in configs and environment variables. For a more secure Docker deployment please skip to the next section to use Docker Swarm which implements Docker secrets.


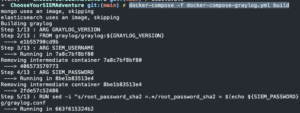

Spin up stack

-

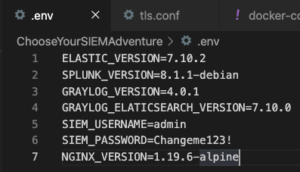

git clone https://github.com/CptOfEvilMinions/ChooseYourSIEMAdventurecd ChooseYourSIEMAdventurevim .envand set:GRAYLOG_VERSION– OPTIONAL – Set the version of Graylog you want to useGRAYLOG_ELATICSEARCH_VERSION– OPTIONAL – Set the version of Elasticsearch to use with GraylogSIEM_USERNAME– LEAVE AS DEFAULT this cannot be modifiedSIEM_PASSWORD– Set the admin password for GraylogNGINX_VERSION– OPTIONAL – Set the version of NGINX you want to use

- Save and exit

sed -i '' "s/GRAYLOG_PASSWORD_SECRET=.*/GRAYLOG_PASSWORD_SECRET=$(openssl rand -base64 32)/g" docker-compose-graylog.yml- Set

GRAYLOG_PASSWORD_SECRETto a random value

- Set

echo $(cat .env | grep SIEM_PASSWORD | awk -F'[/=]' '{print $2}') | tr -d '\n' | openssl sha256 | cut -d" " -f2 | xargs -I '{}' sed -i '' 's/GRAYLOG_ROOT_PASSWORD_SHA2=.*/GRAYLOG_ROOT_PASSWORD_SHA2={}/g' docker-compose-graylog.yml- Based on the

SIEM_PASSWORDdefined in.env, this command will generate a SHA256 of that password. Next, it will set the Graylog environment variable(GRAYLOG_ROOT_PASSWORD_SHA2) to the SHA256 hash.

- Based on the

docker-compose -f docker-compose-graylog.yml build

docker-compose -f docker-compose-graylog.yml up

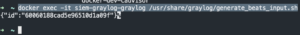

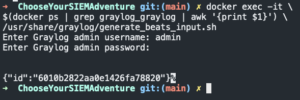

docker exec -it siem-graylog-graylog /usr/share/graylog/generate_beats_input.sh

- Create Beats input
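To make the GRAYLOG_ROOT_PASSWORD_SHA2 step above easier to reason about, here is a minimal Python sketch of the same hashing. It is not part of the repository; the function name is hypothetical. It mirrors the shell pipeline by stripping a trailing newline before hashing, because hashing the newline would produce a different digest and the login would fail.

```python
import hashlib


def graylog_root_password_sha2(password: str) -> str:
    """Return the hex SHA-256 digest of a password.

    Hypothetical helper, not part of the repository. Like `tr -d '\\n'` in
    the shell pipeline, it drops newlines so they are not hashed.
    """
    cleaned = password.replace("\n", "")
    return hashlib.sha256(cleaned.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    # Example value only; use the SIEM_PASSWORD from your own .env file.
    print(graylog_root_password_sha2("ChangeMe-Example\n"))
```

The resulting 64-character hex string is what the Graylog container expects in GRAYLOG_ROOT_PASSWORD_SHA2.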

Install Graylog with Docker-compose v3.x (Swarm)

Unfortunately, Docker-compose version 3.X (Docker Swarm) doesn’t interact with the .env file the same way as v2.X. The trade-off is a more secure deployment of the Graylog stack, because secrets are not hardcoded in configs or stored in environment variables. Below we create Docker secrets that hold these sensitive values for use by the Docker containers.

Furthermore, changes made in the .env file, such as changing the container version or the Mongo database name, will have no effect. These settings need to be changed in the docker-compose-swarm-graylog.yml file. Lastly, another benefit of Docker Swarm is that we can run multiple instances (replicas) of Graylog for high availability.

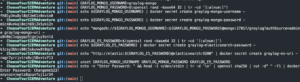

Generate secrets

git clone https://github.com/CptOfEvilMinions/ChooseYourSIEMAdventure

cd ChooseYourSIEMAdventure

GRAYLOG_MONGO_USERNAME=graylog-mongo

- Set Mongo username for Graylog

GRAYLOG_MONGO_PASSWORD=$(openssl rand -base64 32 | tr -cd '[:alnum:]')

- Generate Mongo password for Graylog user

echo ${GRAYLOG_MONGO_USERNAME} | docker secret create graylog-mongo-username -

- Create Docker secret with Mongo username

echo ${GRAYLOG_MONGO_PASSWORD} | docker secret create graylog-mongo-password -

- Create Docker secret with Mongo password

echo "mongodb://${GRAYLOG_MONGO_USERNAME}:${GRAYLOG_MONGO_PASSWORD}@mongo:27017/graylog?authSource=admin&authMechanism=SCRAM-SHA-1" | docker secret create graylog-mongo-uri -

- Generate Mongo URI string for Graylog containing credentials

GRAYLOG_ES_PASSWORD=$(openssl rand -base64 32 | tr -cd '[:alnum:]')

- Generate Elasticsearch password

echo ${GRAYLOG_ES_PASSWORD} | docker secret create graylog-elasticsearch-password -

- Create Docker secret with Elasticsearch password

openssl rand -base64 32 | tr -cd '[:alnum:]' | docker secret create graylog-password-secret -

- Generate graylog-password-secret

echo "http://elastic:${GRAYLOG_ES_PASSWORD}@elasticsearch:9200" | docker secret create graylog-es-uri -

- Generate Elasticsearch URI string for Graylog containing credentials

unset GRAYLOG_MONGO_USERNAME GRAYLOG_MONGO_PASSWORD GRAYLOG_ES_PASSWORD

- Unset environment variables

echo -n "Enter Password: " && head -1 </dev/stdin | tr -d '\n' | openssl sha256 | cut -d" " -f2 | docker secret create graylog-root-password-sha2 -

- Generate SHA256 hash of admin password for Graylog
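The secrets above follow a simple pattern: generate an alphanumeric password, then embed it in a connection URI. The Python sketch below illustrates that pattern under stated assumptions. The helper names are hypothetical, the fixed password length differs from the variable-length output of the openssl pipeline, and the percent-encoding is an extra safeguard that the shell commands do not need because their passwords are alphanumeric only.

```python
import secrets
import string
from urllib.parse import quote_plus

ALPHANUMERIC = string.ascii_letters + string.digits


def generate_password(length: int = 32) -> str:
    """Return a random alphanumeric password (hypothetical helper)."""
    return "".join(secrets.choice(ALPHANUMERIC) for _ in range(length))


def mongo_uri(username: str, password: str, host: str = "mongo",
              port: int = 27017, database: str = "graylog") -> str:
    """Build a Mongo URI shaped like the graylog-mongo-uri secret."""
    # Percent-encode credentials so characters such as @ or / cannot
    # break the URI structure.
    return (
        f"mongodb://{quote_plus(username)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
        "?authSource=admin&authMechanism=SCRAM-SHA-1"
    )


def elasticsearch_uri(password: str, username: str = "elastic",
                      host: str = "elasticsearch", port: int = 9200) -> str:
    """Build an Elasticsearch URI shaped like the graylog-es-uri secret."""
    return f"http://{quote_plus(username)}:{quote_plus(password)}@{host}:{port}"


if __name__ == "__main__":
    print(mongo_uri("graylog-mongo", generate_password()))
```

Restricting passwords to alphanumeric characters, as the shell commands do with tr -cd '[:alnum:]', sidesteps URI escaping entirely.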

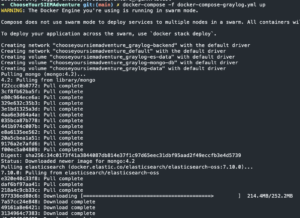

Spin up stack

docker stack deploy -c docker-compose-swarm-graylog.yml graylog

docker service logs -f graylog_graylog

- Monitor Graylog logs

- Wait for INFO : org.graylog2.bootstrap.ServerBootstrap - Graylog server up and running.

docker exec -it $(docker ps | grep graylog_graylog | awk '{print $1}') /usr/share/graylog/generate_beats_input.sh

- Create Beats input

- Enter admin for username

- Enter <graylog admin password> for password

Install Graylog on Ubuntu 20.04 with Ansible

WARNING

This Ansible playbook will allocate half of the system's memory to Elasticsearch. For example, if a machine has 16 GB of memory, 8 GB will be allocated to Elasticsearch.


Init playbook

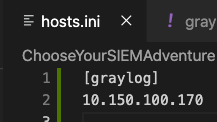

vim hosts.ini and add the IP address of the Graylog server under [graylog]

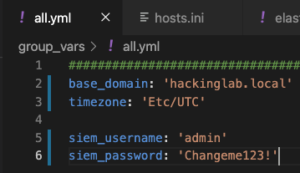

vim group_vars/all.yml and set:

- base_domain – Set the domain where the server resides

- timezone – OPTIONAL – The default timezone is UTC+0

- siem_username – Ignore this setting

- siem_password – Ignore this setting

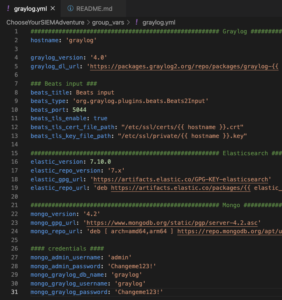

vim group_vars/graylog.yml and set:

- hostname – Set the desired hostname for the server

- graylog_version – Set the desired version of Graylog to use

- beats_port – OPTIONAL – Set the port to ingest logs using Beats clients

- elastic_version – OPTIONAL – Set the desired version of Elasticsearch to use with Graylog – best to leave as default

- elastic_repo_version – Change the repo version to install the Elastic stack –

- mongo_version – OPTIONAL – Set the desired version of Mongo to use with Graylog – best to leave as default

- mongo_admin_username – OPTIONAL – Set Mongo admin username – best to leave as default

- mongo_admin_password – Set the Mongo admin user password

- mongo_graylog_username – Set Mongo username for Graylog user

- mongo_graylog_password – Set Mongo password for Graylog user

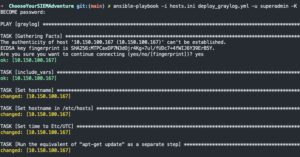

Run playbook

ansible-playbook -i hosts.ini deploy_graylog.yml -u <username> -K

- Enter password

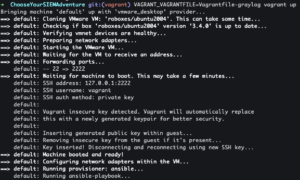

Install Graylog with Vagrant

git clone https://github.com/CptOfEvilMinions/ChooseYourSIEMAdventure

cd ChooseYourSIEMAdventure

VAGRANT_VAGRANTFILE=Vagrantfile-graylog vagrant up

- Spin up VM with Graylog

Manual install of Graylog on Ubuntu 20.04

WARNING

These manual instructions will allocate half of the system's memory to Elasticsearch. For example, if a machine has 16 GB of memory, 8 GB will be allocated to Elasticsearch.


Init host

sudo su

timedatectl set-timezone Etc/UTC

- Set the system timezone to UTC +0

apt update -y && apt upgrade -y && reboot

apt install apt-transport-https openjdk-8-jre-headless uuid-runtime pwgen gnupg -y

- Install Java and necessary tools

Install/Setup Mongo

wget -qO - https://www.mongodb.org/static/pgp/server-4.2.asc | apt-key add -

- Add Mongo GPG key

echo "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu bionic/mongodb-org/4.2 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.2.list

- Add Mongo repo

apt-get update -y && apt-get install -y mongodb-org

- Install Mongo

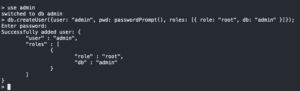

systemctl start mongodsystemctl enable mongodmongo --port 27017- Enter Mongo shell

use admin- Create admin user

db.createUser({user: "admin", pwd: passwordPrompt(), roles: [{ role: "root", db: "admin" }]});- Enter password

- Create Graylog database and user

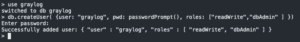

use graylog- Create database

db.createUser( {user: "graylog", pwd: passwordPrompt(), roles: ["readWrite","dbAdmin" ] })- Create

grayloguser - Enter password

- Create

exitsed -i "s/#security:/security:\n authorization: enabled/g" /etc/mongod.conf- Enable authentication on Mongo

systemctl restart mongod

Install/Setup Elasticsearch

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

- Add Elastic GPG key

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-7.x.list

- Add Elastic repo

apt-get update && sudo apt-get install elasticsearch -y

- Install Elasticsearch

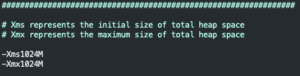

sed -i "s#-Xms1g#-Xms$(echo $(( $(dmidecode -t 17 | grep 'Size: ' | awk '{print $2}') / 2 ))"M")#g" /etc/elasticsearch/jvm.options

- Set the initial heap size (-Xms) for Elasticsearch to half of the system memory

sed -i "s#-Xmx1g#-Xmx$(echo $(( $(dmidecode -t 17 | grep 'Size: ' | awk '{print $2}') / 2 ))"M")#g" /etc/elasticsearch/jvm.options

- Set the maximum heap size (-Xmx) for Elasticsearch to half of the system memory

- These commands assume dmidecode reports a single memory device with its size in MB; check the resulting values in /etc/elasticsearch/jvm.options before starting Elasticsearch
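The half-of-memory rule behind the two heap settings can be sketched in Python as follows. This is an illustration under stated assumptions, not the method used above: it reads the MemTotal line from /proc/meminfo instead of parsing dmidecode output, and the function names are hypothetical.

```python
def half_memory_heap_mb(meminfo_text: str) -> int:
    """Return half of MemTotal in MB, given the text of /proc/meminfo.

    Hypothetical helper. MemTotal is reported in kB, so it is converted
    to MB before halving.
    """
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            total_kb = int(line.split()[1])
            return total_kb // 1024 // 2
    raise ValueError("MemTotal not found in meminfo text")


def jvm_heap_options(heap_mb: int) -> list:
    """Return the initial (-Xms) and maximum (-Xmx) heap options."""
    # Both values are set to the same size, as in the sed commands above.
    return [f"-Xms{heap_mb}m", f"-Xmx{heap_mb}m"]


if __name__ == "__main__":
    with open("/proc/meminfo", encoding="utf-8") as handle:
        print("\n".join(jvm_heap_options(half_memory_heap_mb(handle.read()))))
```

Setting -Xms and -Xmx to the same value avoids heap resizing at runtime, which is why both sed commands use the same calculation.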

echo 'xpack.security.enabled: true' >> /etc/elasticsearch/elasticsearch.yml

- Enable X-Pack security

echo 'xpack.security.transport.ssl.enabled: true' >> /etc/elasticsearch/elasticsearch.yml

- Enable X-Pack security transport SSL

echo 'discovery.type: single-node' >> /etc/elasticsearch/elasticsearch.yml

- Set to a single-node mode

sed -i 's/#cluster.name: my-application/cluster.name: graylog/g' /etc/elasticsearch/elasticsearch.yml

- Set cluster name

echo 'action.auto_create_index: .watches,.triggered_watches,.watcher-history-*' >> /etc/elasticsearch/elasticsearch.yml

- Allow Graylog to control creating indexes

systemctl start elasticsearch

systemctl enable elasticsearch

yes | /usr/share/elasticsearch/bin/elasticsearch-setup-passwords -s auto | grep 'PASSWORD' > /tmp/elasticsearch-setup-passwords.txt

- Generate Elasticsearch passwords

cat /tmp/elasticsearch-setup-passwords.txt

- Print contents

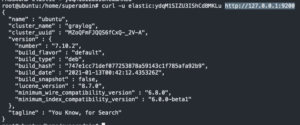

curl -u elastic:<Elastic password> http://127.0.0.1:9200

Create Graylog user on Elasticsearch

elastic_es_password=$(cat /tmp/elasticsearch-setup-passwords.txt | grep 'PASSWORD elastic' | awk '{print $4}')

- Extract Elastic password

curl -u elastic:$elastic_es_password -X POST http://localhost:9200/_xpack/security/role/graylog -H 'Content-Type: application/json' -d '{"cluster": ["manage_index_templates", "monitor", "manage_ilm"], "indices": [{ "names": [ "*" ], "privileges": ["write","create","delete","create_index","manage","manage_ilm","read","view_index_metadata"]}]}'

- Create Graylog role

echo "PASSWORD graylog = $(openssl rand -base64 32 | tr -cd '[:alnum:]')" >> /tmp/elasticsearch-setup-passwords.txt

- Create Graylog password for Elastic and add it to temporary credentials file

graylog_es_password=$(cat /tmp/elasticsearch-setup-passwords.txt | grep 'PASSWORD graylog' | awk '{print $4}')

- Extract Graylog password

curl -u elastic:$elastic_es_password -X POST http://localhost:9200/_security/user/graylog -H 'Content-Type: application/json' -d "{ \"password\" : \"$graylog_es_password\", \"roles\" : [ \"graylog\" ], \"full_name\" : \"Graylog system account\", \"email\" : \"graylog_system@local\"}"

- Create Graylog user
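The escaped JSON in the curl commands above is easy to get wrong. As a hedged alternative, the Python sketch below builds the same role and user payloads with json.dumps and prepares the requests using only the standard library. The helper names are hypothetical, and the requests are constructed but not sent.

```python
import base64
import json
import urllib.request

# Role definition copied from the curl command above.
GRAYLOG_ROLE = {
    "cluster": ["manage_index_templates", "monitor", "manage_ilm"],
    "indices": [{
        "names": ["*"],
        "privileges": ["write", "create", "delete", "create_index",
                       "manage", "manage_ilm", "read", "view_index_metadata"],
    }],
}


def graylog_user(password: str) -> dict:
    """Return the user payload from the curl command above."""
    return {
        "password": password,
        "roles": ["graylog"],
        "full_name": "Graylog system account",
        "email": "graylog_system@local",
    }


def build_request(url: str, payload: dict, username: str,
                  password: str) -> urllib.request.Request:
    """Prepare (but do not send) an authenticated JSON POST request."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": "Basic " + token.decode("ascii"),
        },
    )


if __name__ == "__main__":
    # Placeholder credentials; pass the request to urllib.request.urlopen
    # to actually send it.
    request = build_request("http://localhost:9200/_security/user/graylog",
                            graylog_user("example-password"),
                            "elastic", "example-elastic-password")
    print(request.get_method(), request.full_url)
```

Letting json.dumps handle quoting means a password containing a double quote or backslash cannot corrupt the request body.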

Install/Setup Graylog

cd /tmp && wget https://packages.graylog2.org/repo/packages/graylog-4.2-repository_latest.deb

- Download Graylog repo

dpkg -i graylog-4.2-repository_latest.deb

- Install Graylog repo

apt update -y && apt install graylog-server graylog-integrations-plugins -y

- Install Graylog

echo -n "Enter Password: " && head -1 </dev/stdin | tr -d '\n' | openssl sha256 | cut -d" " -f2 | xargs -I '{}' sed -i "s/root_password_sha2 =.*/root_password_sha2 = {}/g" /etc/graylog/server/server.conf

- Enter admin password for Graylog

- Generate SHA256 hash of password and set root_password_sha2

sed -i "s/password_secret =.*/password_secret = $(pwgen -N 1 -s 96)/g" /etc/graylog/server/server.conf

- Generate and set password_secret

sed -i "s#mongodb_uri =.*#mongodb_uri = mongodb://graylog:<Graylog Mongo password>@localhost:27017/graylog#g" /etc/graylog/server/server.conf

- Set Mongo URI with Graylog Mongo username and password

sed -i 's|^#elasticsearch_hosts =.*|elasticsearch_hosts = http://<graylog_sys_username>:<graylog_sys_password>@localhost:9200|g' /etc/graylog/server/server.conf

- Set Elasticsearch URI with Graylog ES username and password

systemctl restart graylog-server

systemctl enable graylog-server
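Each sed command above does the same thing: find a "key = value" line in server.conf, commented out or not, and replace it. The Python sketch below shows that operation as one reusable function. It is only an illustration; the function name is hypothetical, and it works on text passed in rather than editing /etc/graylog/server/server.conf directly.

```python
import re


def set_conf_value(conf_text: str, key: str, value: str) -> str:
    """Set `key = value` in server.conf-style text (hypothetical helper).

    Replaces the first line that assigns the key, whether or not it is
    commented out with a leading #.
    """
    pattern = re.compile(rf"^#?[ \t]*{re.escape(key)}[ \t]*=.*$", re.MULTILINE)
    # A function replacement keeps backslashes in the value literal.
    new_text, count = pattern.subn(lambda _match: f"{key} = {value}",
                                   conf_text, count=1)
    if count == 0:
        raise KeyError(f"{key} not found in configuration text")
    return new_text


if __name__ == "__main__":
    sample = "password_secret =\n#elasticsearch_hosts = http://node1:9200\n"
    print(set_conf_value(sample, "elasticsearch_hosts",
                         "http://graylog:example@localhost:9200"))
```

Raising an error when the key is missing makes a silent no-op, which sed would produce, visible instead.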

Install/Setup NGINX

apt install nginx -y

- Install NGINX

wget https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/ansible/nginx/nginx.conf -O /etc/nginx/nginx.conf

- Download NGINX.conf

wget https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/ansible/nginx/graylog.conf -O /etc/nginx/conf.d/graylog.conf

- Download Graylog config for NGINX

wget https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/tls/tls.conf -O /etc/ssl/tls.cnf

- Download OpenSSL config

openssl req -x509 -new -nodes -keyout /etc/ssl/private/nginx.key -out /etc/ssl/certs/nginx.crt -config /etc/ssl/tls.cnf

- Generate private key and public certificate for NGINX

systemctl restart nginx

systemctl enable nginx

Setup UFW

ufw allow OpenSSH

ufw allow 5044/tcp

ufw allow 'NGINX HTTP'

ufw allow 'NGINX HTTPS'

ufw enable

Create BEATs input

- SSH into Graylog server

mkdir -p /etc/graylog/tls

openssl req -x509 -new -nodes -keyout /etc/graylog/tls/graylog.key -out /etc/graylog/tls/graylog.crt -config /etc/ssl/tls.cnf

- Generate private key and public certificate for Graylog

chown graylog:graylog /etc/graylog/tls/graylog.crt /etc/graylog/tls/graylog.key

chmod 600 /etc/graylog/tls/graylog.key

chmod 644 /etc/graylog/tls/graylog.crt

- Set the proper permissions for private key and public certificate for Graylog

cd /tmp && wget https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/docker/graylog/generate_beats_input_docker_swarm.sh -O /tmp/generate_beats_input_docker_swarm.sh

chmod +x generate_beats_input_docker_swarm.sh

sed -i 's#/usr/share/graylog#/etc/graylog#g' generate_beats_input_docker_swarm.sh

./generate_beats_input_docker_swarm.sh

- Enter username

- Enter password

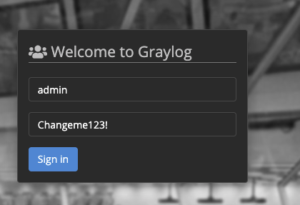

Login into Graylog WebGUI

- Open a browser to https://<IP addr of Graylog>:<Docker port is 8443, Ansible port is 443>

- Enter admin for username

- Enter <graylog admin password> for password

- Select “Sign-in”

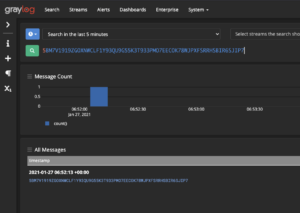

Test Graylog pipeline

cd ChooseYourSIEMAdventure/pipeline_testers

virtualenv -p python3 venv

source venv/bin/activate

pip3 install -r requirements.txt

python3 beats_input_test.py --platform graylog --host <IP addr of Graylog> --api_port <Ansible - 443, Docker - 8443> --ingest_port <Beats input port - default 5044> --siem_username admin --siem_password <Graylog admin password>

- Log in to Graylog

- Select “Search” at the top

- Enter <random message> into search
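The manual check above amounts to generating a unique message, ingesting it, and searching for it. The Python sketch below covers only the generate and search-URL halves of that idea. It is not the repository's beats_input_test.py. It assumes that your Graylog version still exposes the legacy universal relative search endpoint (/api/search/universal/relative), and the function names are hypothetical.

```python
import uuid
from urllib.parse import urlencode


def random_test_message() -> str:
    """Return a unique marker string that is easy to search for."""
    return f"graylog-pipeline-test-{uuid.uuid4().hex}"


def search_url(host: str, api_port: int, message: str,
               range_seconds: int = 300) -> str:
    """Build a search URL for the message.

    Assumes the legacy universal relative search endpoint; verify it
    against the API browser of your Graylog version.
    """
    params = urlencode({
        "query": f'"{message}"',   # quoted for an exact phrase match
        "range": range_seconds,    # look back this many seconds
        "limit": 1,
    })
    return f"https://{host}:{api_port}/api/search/universal/relative?{params}"


if __name__ == "__main__":
    marker = random_test_message()
    print(marker)
    print(search_url("graylog.example.com", 443, marker))
```

A random marker avoids false positives from earlier test runs, which is presumably why the search step above asks for the random message rather than a fixed string.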

Ingest Osquery logs with Filebeat on Windows 10

Install/Setup Osquery v4.6.0 on Windows 10

- Login into Windows

- Open Powershell as an Administrator

cd $ENV:TEMP

- Cd to user’s temp directory

Invoke-WebRequest -Uri https://pkg.osquery.io/windows/osquery-4.6.0.msi -OutFile osquery-4.6.0.msi -MaximumRedirection 3

- Download Osquery

Start-Process $ENV:TEMP\osquery-4.6.0.msi -ArgumentList '/quiet' -Wait

- Install Osquery

Invoke-WebRequest -Uri https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/osquery/windows-osquery.flags -OutFile 'C:\Program Files\osquery\osquery.flags'

- Download Osquery.flags config

Invoke-WebRequest -Uri https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/osquery/windows-osquery.conf -OutFile 'C:\Program Files\osquery\osquery.conf'

- Download Osquery.conf config

Restart-Service osqueryd

Install/Setup Filebeat on Windows 10

- Login into Windows

- Open Powershell as an Administrator

cd $ENV:TEMP

- Cd to user’s temp directory

$ProgressPreference = 'SilentlyContinue'

Invoke-WebRequest -Uri https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.10.0-windows-x86_64.zip -OutFile filebeat-7.10.0-windows-x86_64.zip -MaximumRedirection 3

- Download Filebeat

Expand-Archive .\filebeat-7.10.0-windows-x86_64.zip -DestinationPath .

- Unzip Filebeat

mv .\filebeat-7.10.0-windows-x86_64 'C:\Program Files\filebeat'

- Move Filebeat to the Program Files directory

cd 'C:\Program Files\filebeat\'

- Enter Filebeat directory

Invoke-WebRequest https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/filebeat/windows-filebeat.yml -OutFile .\filebeat.yml

- Download Filebeat config

- Using your favorite text editor, open C:\Program Files\filebeat\filebeat.yml

  - Open the document from the command line with Visual Studio Code: code .\filebeat.yml

  - Open the document from the command line with Notepad: notepad.exe .\filebeat.yml

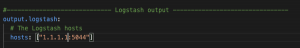

- Scroll down to the output.logstash: section

  - Replace logstash_ip_addr with the IP address or FQDN of the Graylog server (its Beats input takes the place of Logstash here)

  - Replace logstash_port with the port the Graylog Beats input listens on (default 5044)

.\filebeat.exe modules enable osquery

- Enable Osquery module

(Get-Content 'C:\Program Files\filebeat\module\osquery\result\manifest.yml').Replace('C:/ProgramData/osquery', 'C:/Program Files/osquery') | Set-Content 'C:\Program Files\filebeat\module\osquery\result\manifest.yml'

- Replace OLD Osquery logging location with new location

powershell -Exec bypass -File .\install-service-filebeat.ps1

- Install Filebeat service

Set-Service -Name "filebeat" -StartupType automatic

Start-Service -Name "filebeat"

Get-Service -Name "filebeat"

Ingest AuditD logs with Auditbeat on Ubuntu 20.04

Install/Setup AuditD on Ubuntu 20.04

apt update -y && apt upgrade -y

apt install auditd -y

- Install AuditD

wget https://raw.githubusercontent.com/Neo23x0/auditd/master/audit.rules -O /etc/audit/rules.d/audit.rules

- Download open-source ruleset

systemctl restart auditd

- Load rules

auditctl -l

- List newly loaded rules

Install/Setup AuditBeat on Ubuntu 20.04

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

- Add Elastic key

apt-get install apt-transport-https -y

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee /etc/apt/sources.list.d/elastic-7.x.list

- Add Elastic repo

apt update -y && apt install auditbeat -y

- Install AuditBeat

wget https://raw.githubusercontent.com/CptOfEvilMinions/ChooseYourSIEMAdventure/main/conf/auditbeat/auditbeat.yml -O /etc/auditbeat/auditbeat.yml

sed -i 's/{{ logstash_ip_addr }}/<IP addr of Graylog>/g' /etc/auditbeat/auditbeat.yml

sed -i 's/{{ logstash_port }}/<Port of Beats input - default 5044>/g' /etc/auditbeat/auditbeat.yml

systemctl restart auditbeat

systemctl enable auditbeat
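The two sed commands above fill in {{ name }} placeholders in the downloaded config. The Python sketch below shows the same substitution in a form that fails loudly if a placeholder has no value. The function name and the sample template line are hypothetical; the real auditbeat.yml in the repository may be laid out differently.

```python
import re

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_placeholders(template: str, values: dict) -> str:
    """Replace every {{ name }} placeholder with its value.

    Hypothetical helper; raises KeyError when a placeholder has no value,
    so an unfilled template is never written out silently.
    """
    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for placeholder {name!r}")
        return str(values[name])

    return PLACEHOLDER.sub(substitute, template)


if __name__ == "__main__":
    # Hypothetical template line, for illustration only.
    sample = 'hosts: ["{{ logstash_ip_addr }}:{{ logstash_port }}"]'
    print(render_placeholders(sample, {"logstash_ip_addr": "10.0.0.5",
                                       "logstash_port": 5044}))
```

After substitution, a quick grep for {{ in /etc/auditbeat/auditbeat.yml confirms that no placeholder was left behind.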

Create Graylog indexes

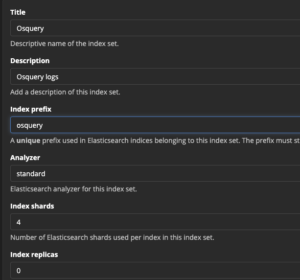

- System > Indices

- Select “Create index set” in the top right

  - Enter Osquery for name

  - Enter Osquery logs for description

  - Enter osquery for index prefix

  - Leave everything as default

  - Select “Save”

- Repeat the steps above for AuditD

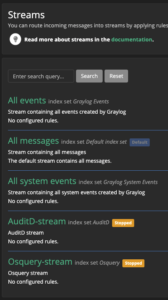

Create Graylog stream

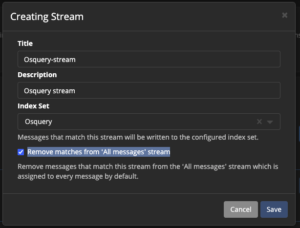

- Select “Streams” at the top

- Select “Create stream” in the top right

  - Enter Osquery-stream for name

  - Enter Osquery stream for description

  - Select Osquery for index set

  - Check “Remove matches from ‘All messages’ stream”

  - Select “Save”

- Repeat the steps above for AuditD

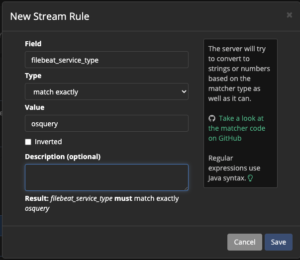

- Select “Manage Rules” for Osquery stream

- Select “Add stream rule” on the right

  - Enter filebeat_service_type for field

  - Select match exactly for type

  - Enter osquery for value

  - Select “Save”

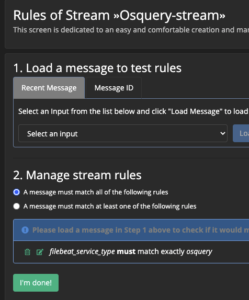

- Select “I’m done” in bottom left

- Select “Start stream” for Osquery stream

- Repeat the steps above for AuditD

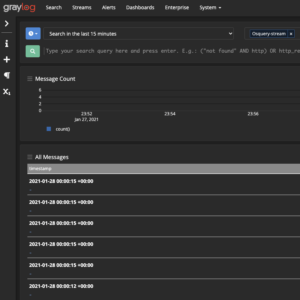

- Select “Search” at the top

- Enter Osquery-stream into stream selector

- Hit enter

Lessons learned

New skills/knowledge

- Learned Graylog 4.0 and 4.1

- Learned how to use the Graylog API

- Learned how to secure Mongo

- Implemented authentication on Elasticsearch
