As with my last post on my logging pipeline, people have asked for more detail about my Docker setup so they can learn from it or replicate it. This blog post is my attempt to share my Docker setup as a framework for newcomers. The hope is that the explanation of the architecture, design decisions, working infrastructure-as-code, and the knowledge I have accumulated over the years will be beneficial to the community.

Goals

- Install Docker

- Setup Docker Swarm

- Connect macOS to remote Docker instance

- Setup Traefik as a reverse proxy

- Review methods to monitor Docker infrastructure

- Review some Docker pro tips and magic

- Docker secrets

- Docker networks

- Docker configs

ASSUMPTIONS

This blog post is written as a proof of concept and is not a comprehensive guide. First, this post will NOT cover how Docker works, so it assumes you have some previous experience with this technology. Second, this blog post contains setups and configurations that may NOT be production-ready and are meant to be proofs of concept (POCs).

Background

What is Docker?

Docker is a tool designed to make it easier to create, deploy, and run applications by using containers. Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and deploy it as one package. By doing so, thanks to the container, the developer can rest assured that the application will run on any other Linux machine regardless of any customized settings that machine might have that could differ from the machine used for writing and testing the code.

What is Docker Swarm?

A Docker Swarm is a group of either physical or virtual machines that are running the Docker application and that have been configured to join together in a cluster. Once a group of machines have been clustered together, you can still run the Docker commands that you’re used to, but they will now be carried out by the machines in your cluster. The activities of the cluster are controlled by a swarm manager, and machines that have joined the cluster are referred to as nodes.

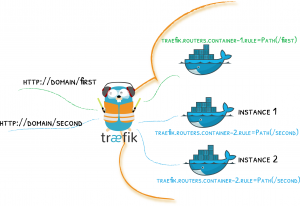

What is Traefik?

Traefik is an open-source Edge Router that makes publishing your services a fun and easy experience. It receives requests on behalf of your system and finds out which components are responsible for handling them. What sets Traefik apart, besides its many features, is that it automatically discovers the right configuration for your services. The magic happens when Traefik inspects your infrastructure, where it finds relevant information and discovers which service serves which request.

What is the monitoring stack?

In this blog post, I review the monitoring stack that I use, which is composed of cAdvisor, InfluxDB, Prometheus, and Grafana. This monitoring stack provides a wide array of monitoring capabilities, from individual Docker hosts and virtual machines to my pfSense router and more. All of these technologies have pre-made dashboards that can be imported into Grafana to provide colorful metrics like the photo below.

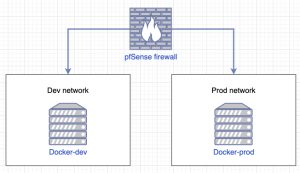

Network diagram

In my homelab network, I have a production (prod) network and a development (dev) network. The production network contains services such as FreeIPA for LDAP + DNS, GitLab for source code management + CI/CD, a Traefik instance for HTTPS, APT-Cacher-NG for caching updates, and a Squid proxy for HTTP(S) egress. My production network is extremely restrictive on ingress and egress traffic. Also, anything that isn’t essential WILL NEVER exist inside my production network. Next, I have my development network, which is basically the exact opposite of the production network: it has less restrictive firewall rules for easier development and testing of new things. These are the general principles I use in my network, and more specifically with my Docker servers.

Install/Setup Docker on Ubuntu 18.04

Install/Setup Docker CE

- SSH into the VM for Docker

sudo su

hostnamectl set-hostname <hostname>

- I use docker-dev and docker-prod

apt-get remove docker docker-engine docker.io -y

apt-get update -y && sudo apt-get install apt-transport-https ca-certificates curl software-properties-common -y

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

apt-get update -y

apt-get install docker-ce -y

exit

sudo usermod -aG docker ${USER}

sudo systemctl start docker

sudo systemctl enable docker

Init Docker Swarm

sudo docker swarm init --advertise-addr <IP addr of Docker>

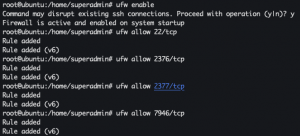

Setup UFW firewall

sudo su

ufw enable

ufw allow 22/tcp

ufw allow 2376/tcp

ufw allow 2377/tcp

ufw allow 7946/tcp

Connect to remote Docker instance from macOS

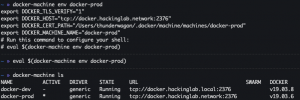

This is by far the BEST FEATURE of Docker I have discovered and it has completely changed my ENTIRE workflow. I give all the credit to this blog post for how to set this up. This feature allows you to run Docker commands and Docker compose files locally on your macOS machine but the actions happen on a remote Docker instance.

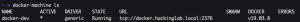

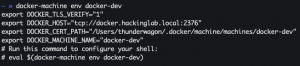

Setup remote docker-machine

docker-machine create --driver generic --generic-ip-address=<Docker IP addr/FQDN> --generic-ssh-key ~/.ssh/id_rsa --generic-ssh-user=<user with sudo privileges> <docker-machine alias name>

Connect to remote docker-machine

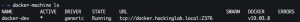

docker-machine ls

docker-machine env <docker-machine alias name>

eval $(docker-machine env <docker-machine alias name>)

Add remote docker-machine to .profile

echo 'eval $(docker-machine env <docker-machine alias name>)' >> ~/.profile

source ~/.profile

docker-machine ls

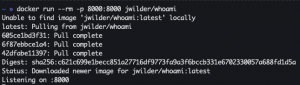

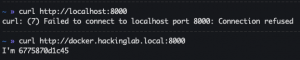

Docker whoami

docker run --rm -p 8000:8000 jwilder/whoami

- Open a new terminal tab

curl http://localhost:8000

curl http://<Docker IP addr/FQDN>:8000

- Kill Docker container

Switching between Docker instances

docker-machine ls

docker-machine env <Docker machine name>

eval $(docker-machine env <Docker machine name>)
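Since switching is always the same pair of commands, it can be wrapped in two small shell functions. This is only a sketch, assuming docker-machine is installed and on the PATH; the function names docker_use and docker_local are hypothetical and not part of Docker.

```shell
# Hypothetical helpers; they could live in ~/.profile next to the eval line.

# Point the local Docker CLI at the named docker-machine.
docker_use() {
  if [ -z "$1" ]; then
    echo "usage: docker_use <Docker machine name>" >&2
    return 1
  fi
  local env_lines
  # docker-machine env prints "export DOCKER_HOST=..." style lines.
  env_lines="$(docker-machine env "$1")" || return 1
  eval "$env_lines"
  echo "Docker CLI now targets ${DOCKER_MACHINE_NAME:-$1} at ${DOCKER_HOST:-<unset>}"
}

# Point the Docker CLI back at the local Docker daemon.
docker_local() {
  # With --unset, docker-machine prints "unset DOCKER_HOST" style lines instead.
  eval "$(docker-machine env --unset)"
}
```

With these loaded, docker_use docker-dev followed by docker ps lists the containers on the dev instance, and docker_local returns the CLI to the local daemon.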

Monitoring Docker infrastructure

Docker stats

A super simple way to monitor your infrastructure is to use the docker ps and docker stats commands. The docker ps command is very similar to the Linux ps command (even some of the flags) and takes a snapshot of all the Docker containers running on a system at a particular instant in time. This command is useful to see (screenshot below) whether a container is running, what ports are exposed by a container, how long a container has been running, the container ID, and the container name. The docker stats command is very similar to the Linux top command and shows (screenshot below) a real-time feed for each Docker container: container ID, container name, CPU consumed, memory consumed, high-level bandwidth consumption, and more. I personally use the docker stats output to decide on a container’s resource limits, but more on that later.
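As a sketch of how a docker stats snapshot can feed sizing decisions, the function below lists containers whose memory usage is above a percentage threshold. The function name heavy_containers and the 50% threshold are hypothetical; --no-stream and --format with the .Name and .MemPerc fields are standard docker stats options.

```shell
# Read "<name> <mem%>" lines on stdin and print the containers whose memory
# usage is above the given percentage. heavy_containers is a hypothetical name.
heavy_containers() {
  awk -v limit="${1:-50}" '{
    pct = $2
    sub(/%$/, "", pct)                 # "72.40%" -> "72.40"
    if (pct + 0 > limit + 0) print $1, $2
  }'
}

# Take a single snapshot instead of the live feed and filter it.
# Skipped when the docker CLI is not installed.
if command -v docker >/dev/null 2>&1; then
  docker stats --no-stream --format '{{.Name}} {{.MemPerc}}' | heavy_containers 50
fi
```

The percentage is relative to the container's memory limit, or to the host's memory when no limit is set, so the same snapshot looks different before and after limits are applied.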

Now for years, I have used the commands above to manually monitor my Docker infrastructure but the more I relied on Docker for running services the more I found it was unfeasible to continue that, ergo cAdvisor, Grafana, and Prometheus. This monitoring stack provides a holistic approach to monitoring more than just your Docker infrastructure.

What is cAdvisor?

cAdvisor (Container Advisor) provides container users an understanding of the resource usage and performance characteristics of their running containers. It is a running daemon that collects, aggregates, processes, and exports information about running containers. Specifically, for each container it keeps resource isolation parameters, historical resource usage, histograms of complete historical resource usage and network statistics. This data is exported by container and machine-wide.

What is Prometheus?

Prometheus, a Cloud Native Computing Foundation project, is a systems and service monitoring system. It collects metrics from configured targets at given intervals, evaluates rule expressions, displays the results, and can trigger alerts if some condition is observed to be true.

What is Grafana?

Grafana allows you to query, visualize, alert on and understand your metrics no matter where they are stored. Create, explore, and share dashboards with your team and foster a data-driven culture:

- Visualize: Fast and flexible client side graphs with a multitude of options. Panel plugins for many different ways to visualize metrics and logs.

- Dynamic Dashboards: Create dynamic & reusable dashboards with template variables that appear as dropdowns at the top of the dashboard.

- Explore Metrics: Explore your data through ad-hoc queries and dynamic drill down. Split view and compare different time ranges, queries and data sources side by side.

- Explore Logs: Experience the magic of switching from metrics to logs with preserved label filters. Quickly search through all your logs or stream them live.

- Alerting: Visually define alert rules for your most important metrics. Grafana will continuously evaluate and send notifications to systems like Slack, PagerDuty, VictorOps, OpsGenie.

- Mixed Data Sources: Mix different data sources in the same graph! You can specify a data source on a per-query basis. This works for even custom data sources.

Spin up Traefik for HTTPS

Traefik is a very common Docker container that is used as an HTTP(S) reverse proxy and does auto-discovery of new containers. This auto-discovery feature monitors the Docker socket (/var/run/docker.sock) for the status of containers and automatically adjusts routes based on the container status. The only things required to make a container routable behind Traefik are adding labels and attaching the container to the Traefik network, as seen below with the CyberChef container.

version: "3.3"
services:
  cyberchef:
    image: remnux/cyberchef:latest
    container_name: cyberchef
    restart: unless-stopped
    networks:
      - traefik-v3_traefik-net
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.cyberchef.rule=Host(`cyberchef.hackinglab.local`)"
      - "traefik.http.routers.cyberchef.tls=true"
networks:
  traefik-v3_traefik-net:
    external: true

This docker-compose file has three labels that instruct Traefik to route traffic for this Docker service. The first label (traefik.enable=true) instructs Traefik to act as a reverse proxy for the Docker service. The second label (traefik.http.routers.cyberchef.rule=Host(`cyberchef.<domain>`)) specifies the FQDN for the Docker service so Traefik knows where to route traffic based on the HTTP Host header of the request. The third label (traefik.http.routers.cyberchef.tls=true) enables TLS on the router, so the connection between the client and Traefik is encrypted. Lastly, at the bottom, we declare the pre-existing Docker network (traefik-v3_traefik-net) as external and connect our Docker service to it.

Docker magic and pro-tips

PIN EVERYTHING!!!

Pinning Docker image versions is super important for operability. I have restarted a Docker stack with docker-compose and it downloaded the latest image which was not compatible with my config or a version of a service in my Docker stack. Pinning versions is an extremely good practice that everyone should do. So instead of doing image: mysql:latest or image: mysql (if you don’t specify a version it will default to latest) pin a version by doing image: mysql:5.7.
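To make the habit easier to keep, a quick check can flag unpinned images before a stack is started. The following is a minimal sketch, not a full YAML parser: it assumes one image: key per line, and the function name find_unpinned_images and the sample services are hypothetical.

```shell
# Print every "image:" value in a compose file that has no tag at all
# or uses the "latest" tag. Digest references (name@sha256:...) contain
# a ":" after the last "/" and are therefore treated as pinned.
find_unpinned_images() {
  sed -n 's/^[[:space:]]*image:[[:space:]]*//p' "$1" \
    | tr -d "\"'" \
    | awk '{
        ref = $1
        n = split(ref, parts, "/")
        last = parts[n]                # part after the last "/", e.g. "mysql:5.7"
        if (last !~ /:/ || last ~ /:latest$/) print ref
      }'
}

# Demonstration with a throwaway compose file.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
services:
  db:
    image: mysql
  cyberchef:
    image: remnux/cyberchef:latest
  cache:
    image: redis:5.0.7
EOF

find_unpinned_images "$sample"
# prints:
# mysql
# remnux/cyberchef:latest
```

Only the part after the last slash is inspected, so a registry port such as registry.local:5000/app is not mistaken for a tag.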

Docker-compose versions 2.X vs 3.X

At the top of a docker-compose file, you specify the file format version you want to use. While instinctively the higher number is usually newer, better, and has more features, that is not necessarily true. Docker-compose version 2.2 is for local deployments or single-node Docker servers NOT in Swarm mode. Docker-compose version 3.3+ is for production deployments or Docker server(s) in Swarm mode. Be mindful that each version has capabilities that are not necessarily replicated in the other. For example, version 3.3 supports Docker secrets but version 2.2 doesn’t, while version 2.2 supports a very minimal and clean way to specify the maximum amount of resources (CPUs and memory) a container may consume. For more information, please refer to the Compose file version 2 and version 3 references in the Docker documentation.
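To illustrate the resource-limit difference, the sketch below writes the same service in both file formats. The service name, the mysql:5.7 image, and the limit values are hypothetical examples; the keys (cpus and mem_limit in 2.2, deploy.resources.limits in 3.3) come from the Compose file references.

```shell
compose_dir="$(mktemp -d)"

# File format 2.2: limits sit directly on the service and are honored
# by a plain `docker-compose up` on a single node.
cat > "$compose_dir/docker-compose.v2.yml" <<'EOF'
version: "2.2"
services:
  db:
    image: mysql:5.7
    cpus: 0.5
    mem_limit: 512m
EOF

# File format 3.3: limits move under deploy.resources and are applied
# when the file is deployed to a Swarm with `docker stack deploy`.
cat > "$compose_dir/docker-compose.v3.yml" <<'EOF'
version: "3.3"
services:
  db:
    image: mysql:5.7
    deploy:
      resources:
        limits:
          cpus: "0.50"
          memory: 512M
EOF

echo "Wrote example compose files to $compose_dir"
```

The version 2.2 form is two lines per service, which is what makes it feel cleaner for a single-node setup.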

My Docker-compose philosophy

My philosophy is that your Docker stack may need some initial configuration or generation of keys/certs, but you should ONLY need to run docker-compose up to spin up your stack. I see blog posts and Docker setups all over the place that make you run additional commands in containers and additional steps to bring up the stack. My personal belief is that if you are doing this you are using Docker incorrectly OR you are attempting to use Docker in a way it was not designed to be used. NO, I will repeat NO, I do not want your Makefile to start up the Docker stack, I just want docker-compose!

One common mistake I see with applications like Django is that you need to run a separate command to initialize the database. I understand you can’t add this command to your Dockerfile because the Docker build process won’t spin up a database to run the command against. I’ve seen entrypoint.sh scripts written to handle this issue, or commands run in the Docker container after docker-compose up. I’m here to tell ya that is not necessary; perform the following steps:

docker-compose build <django app name>

docker-compose run <django app name> <DB init command>

docker-compose up -d

The command sequence above builds the Docker image for your Django app, then starts the services the app depends on (such as the database, as declared in the compose file) and runs your init command in a one-off container, and lastly spins up the full stack with the DB initialized.

Docker restart

Docker will monitor a container and restart it if it crashes, but the restart policy you select is important. I see a lot of GitHub code with restart: always in the docker-compose file, which in my opinion is a super big no-no. restart: always means that Docker will ALWAYS bring the container back: even if you stop the container manually, it is started again the next time the Docker daemon restarts (for example, after a reboot). I prefer restart: unless-stopped, which restarts the container after a crash but leaves it stopped once you issue the docker stop command or bring down the stack.

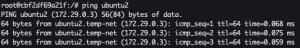

Docker networks

I’ll be honest, explaining the concept of a Docker network is not easy, so go here for more information if my explanation is not enough, or see the examples below. Docker networks are another really cool feature of Docker and using them is good practice! Docker networks provide isolation and local DNS resolution based on container names. In the services section of the docker-compose.yml file you specify containers, and the top-level YAML key of each service/container is its name. You can use that service name as a hostname to route traffic to that service. As seen in the first example below, we create a Docker network named temp-net and the containers can use the names ubuntu1 and ubuntu2 to communicate. However, in the second example, the containers are on different networks and cannot communicate with each other. The last example demonstrates that a container can exist on multiple Docker networks and communicate with containers on each network. Hopefully, the examples demonstrate the power of Docker networks.

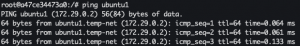

Same Docker network

docker network create temp-net

docker run -it --net=temp-net --name ubuntu1 ubuntu:18.04 bash

apt-get update -y && apt-get install iputils-ping -y

- Open a new terminal

docker run -it --net=temp-net --name ubuntu2 ubuntu:18.04 bash

apt-get update -y && apt-get install iputils-ping -y

- In Ubuntu1 terminal:

ping ubuntu2

- In Ubuntu2 terminal:

ping ubuntu1

- Exit both containers

docker network rm temp-net

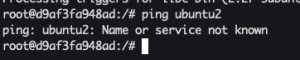

Isolated Docker network

docker network create temp-net1

docker network create temp-net2

docker run -it --net=temp-net1 --name ubuntu1 ubuntu:18.04 bash

apt-get update -y && apt-get install iputils-ping -y

- Open a new terminal

docker run -it --net=temp-net2 --name ubuntu2 ubuntu:18.04 bash

apt-get update -y && apt-get install iputils-ping -y

- In Ubuntu1 terminal:

ping ubuntu2

- In Ubuntu2 terminal:

ping ubuntu1

- Keep the networks and containers alive

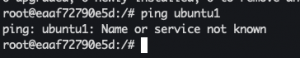

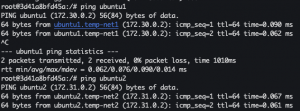

Multiple Docker networks

- Open a new terminal

docker run -it --net=temp-net1 --name ubuntu3 ubuntu:18.04 bash

- Open a new terminal

docker network connect temp-net2 ubuntu3

- Close terminal

- In Ubuntu3 terminal:

apt-get update -y && apt-get install iputils-ping -y

ping ubuntu2

ping ubuntu1
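The same three-container layout can also be described declaratively. The sketch below writes a compose file in which ubuntu1 and ubuntu2 sit on separate networks and ubuntu3 joins both. It is an illustration under assumptions: the file is hypothetical, sleep infinity is only there to keep the containers alive, and ping still has to be installed inside each container as in the steps above.

```shell
net_dir="$(mktemp -d)"

cat > "$net_dir/docker-compose.yml" <<'EOF'
version: "2.2"
services:
  ubuntu1:
    image: ubuntu:18.04
    command: sleep infinity
    networks:
      - temp-net1
  ubuntu2:
    image: ubuntu:18.04
    command: sleep infinity
    networks:
      - temp-net2
  ubuntu3:
    image: ubuntu:18.04
    command: sleep infinity
    networks:
      - temp-net1
      - temp-net2
networks:
  temp-net1:
  temp-net2:
EOF

echo "Wrote $net_dir/docker-compose.yml"
```

After docker-compose up -d in that directory, ubuntu3 should be able to resolve both ubuntu1 and ubuntu2 by service name, while ubuntu1 and ubuntu2 cannot resolve each other. Compose prefixes the network names with the project name, so they show up in docker network ls as something like <project>_temp-net1.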

Docker secrets

Docker secrets is another cool feature of Docker Swarm. Instead of hard coding secrets into your code, you can use Docker secrets. For example, in Python instead of hard coding an API key to a string, you instruct Python to read that secret from a file that is mounted by Docker at run time. Docker typically mounts secrets inside the container at the following location /run/secrets/<secret-name> and the secret is basically a text file with your API key.

echo "SUPER_SECRET_API_KEY" | docker secret create super_secret_api_key -

docker secret ls

docker service create --name redis --secret super_secret_api_key redis:alpine

docker ps

- Get Docker ID

docker exec -it <Docker container ID> sh

cat /run/secrets/super_secret_api_key

exit

docker service rm redis

docker secret rm super_secret_api_key
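On the consuming side, reading a secret is just reading a file. The sketch below shows the idea as a shell helper that an entrypoint script could use; read_secret and SECRETS_DIR are hypothetical names, and the temporary directory only stands in for /run/secrets so the example runs outside a container.

```shell
# Directory where Docker mounts secrets; overridable for local testing.
# SECRETS_DIR and read_secret are hypothetical names, not part of Docker.
SECRETS_DIR="${SECRETS_DIR:-/run/secrets}"

# Print the value of a Docker secret, or fail loudly if it is not mounted.
read_secret() {
  local secret_path="${SECRETS_DIR}/$1"
  if [ -r "$secret_path" ]; then
    cat "$secret_path"
  else
    echo "secret '$1' not found at ${secret_path}" >&2
    return 1
  fi
}

# Local demonstration: a temporary directory stands in for /run/secrets.
SECRETS_DIR="$(mktemp -d)"
printf 'SUPER_SECRET_API_KEY\n' > "${SECRETS_DIR}/super_secret_api_key"

API_KEY="$(read_secret super_secret_api_key)"
echo "Loaded an API key of ${#API_KEY} characters"
# prints: Loaded an API key of 20 characters
```

Failing when the file is missing is deliberate: a service that starts without its secret tends to break later in ways that are harder to trace.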

Docker configs

Docker configs are the exact same concept as Docker secrets above but are used for configuration files. I have a base NGINX config that I use for all my NGINX setups. This base NGINX config (snippet below) ensures that all my NGINX web servers play by the same rules: only allow TLS v1.2+, only allow strong encryption ciphers, load the TLS certs from Docker secrets, and load additional configs from /etc/nginx/conf.d/*.conf. In a production environment (env), it can be difficult to keep all your containers on a single config like this. By creating a base config like the one below, you can ensure that all the NGINX containers in your env are playing by the same rules.

http {
    ...

    ##
    # SSL Settings
    ##
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_prefer_server_ciphers on;
    ssl_ciphers "EECDH+AESGCM:EDH+AESGCM:AES256+EECDH:AES256+EDH";
    ssl_ecdh_curve secp384r1;

    # SSL cert
    ssl_certificate /run/secrets/docker-cert;
    ssl_certificate_key /run/secrets/docker-key;
    ssl_dhparam /run/secrets/docker-dhparam;

    ##
    # Virtual Host Configs
    ##
    include /etc/nginx/conf.d/*.conf;

    ...
}
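A base config like this could be wired into a Swarm service with a version 3.3 stack file, roughly as sketched below. The secret names match the paths in the snippet above; everything else (the service name, the nginx:1.25 tag, the config name nginx_base, and the local file paths) is a hypothetical example rather than my actual stack.

```shell
stack_dir="$(mktemp -d)"

# The config is mounted as /etc/nginx/nginx.conf and each secret appears
# under /run/secrets/<name>, which is where the base config looks for them.
cat > "$stack_dir/nginx-stack.yml" <<'EOF'
version: "3.3"
services:
  nginx:
    image: nginx:1.25
    ports:
      - "443:443"
    configs:
      - source: nginx_base
        target: /etc/nginx/nginx.conf
    secrets:
      - docker-cert
      - docker-key
      - docker-dhparam
configs:
  nginx_base:
    file: ./nginx.conf
secrets:
  docker-cert:
    file: ./tls/docker.crt
  docker-key:
    file: ./tls/docker.key
  docker-dhparam:
    file: ./tls/dhparam.pem
EOF

echo "Wrote $stack_dir/nginx-stack.yml"
# Deploy to a Swarm with: docker stack deploy -c nginx-stack.yml <stack name>
```

Every NGINX service that references nginx_base gets the same file, which is what keeps the TLS rules consistent across containers.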

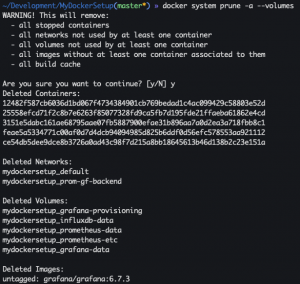

Docker system

docker system prune -a --volumes

-a: Clean all unused Docker images

--volumes: Clean all unused Docker volumes

docker system df

Discussion

Tags over separate instances

Some may argue that I should keep my current setup but, instead of having two separate Docker Swarms, combine them into one Swarm and use labels to designate the target node. I agree that is a viable option, but for my workflow I prefer having two separate systems. Also, on my Docker dev node, I just download and run any container I want to play with, and there is a chance I could download a malicious container. My fear is that a malicious container could take over the Docker Swarm, steal my Docker secrets, etc. While this scenario is probably unlikely, a more likely scenario is me forgetting to set the appropriate labels and provisioning my Docker stack to the wrong instance. Again, I just prefer separate Docker Swarm nodes, but feel free to do what you want in your own environment. Lastly, if my Docker dev node is infected by a malicious container, I can destroy that VM and re-run all the docker-compose files.

Why not Kubernetes?

I’m still learning Kubernetes via Udemy courses but Kubernetes feels overwhelming and complicated for my homelab needs. With Docker, I probably use 80% of its functionality but with Kubernetes I feel like I am barely using 10% of its capabilities. It’s kinda like the argument for do you need to purchase Microsoft Office if Google Docs is free? There are arguments for each solution but if you only need a basic word processor then Microsoft Word is unnecessary, so Kubernetes is my Microsoft Word.

Docker-compose examples

I have compiled a GitHub repo containing example code for the things discussed in this blog post.

https://github.com/CptOfEvilMinions/MyDockerSetup

Recommended Udemy classes

Lessons learned

I am currently reading a book called “Cracking the Coding Interview” and it is a great book. One interesting part of the book is their matrix to describe projects you worked on and the matrix contains the following sections which are: challenges, mistakes/failures, enjoyed, leadership, conflicts, and what you’d do differently. I am going to try and use this model at the end of my blog posts to summarize and reflect on the things I learn. I don’t blog to post things that I know, I blog to learn new things and to share the knowledge of my security research.

New skills/knowledge

- Learned Docker

- Learned good DevOps practices and hygiene

- Learned an orchestration tool

Challenges

- There’s a million ways to do things with Docker but a limited set of ways to do it right. The internet usually takes shortcuts instead of demonstrating better ways to do things.